Machine learning is getting lots of attention in the maker community, expanding outward from the realms of academia and industry and making its way into DIY projects. With traditional programming you explicitly tell a computer what it needs to do using code; with machine learning the computer finds its own solution to a problem, based on examples you’ve shown it. You can use machine learning to work with complex datasets that would be very difficult to hard-code, and the computer can find connections you might miss!

How Machine Learning Works

How does machine learning (ML) actually work? Let’s use the classic example: training a machine to recognize the difference between pictures of cats and dogs. Imagine that a small child, with an adult, is looking at a book full of pictures of cats and dogs. Every time the child sees a cat or a dog, the adult points at it and tells them what it is, and every time the child calls a cat a dog, or vice versa, the adult corrects the mistake. Eventually the child learns to recognize the differences between the two animals.

In the same way, machine learning starts off by giving a computer lots of data. The computer looks for patterns in that data and tries to model them. This is called training. There are three main approaches. The picture book example, where the adult corrects the child’s mistakes, is similar to supervised machine learning. If the child is asked to sort the pages of their book into two piles based on differences they notice themselves, with no help from an adult, that would be more like unsupervised machine learning. The third style is reinforcement learning: the child receives no help from the adult but instead gets a delicious cookie every time they get the answer correct.

Once this training process is complete the child should have a good idea of what a cat is and what a dog is. They will be able to use this knowledge to identify all sorts of cats and dogs — even breeds, colors, shapes, and sizes that were not shown in the picture book. In the same way, once a computer has figured out patterns in one set of data, it can use those patterns to create a model and attempt to recognize and categorize new data. This is called inference.
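To make the training and inference steps concrete, here is a minimal sketch in Python of a supervised classifier. It is an illustration only. The two features (ear pointiness and snout length, each scored from 0 to 1) and all of the numbers are made up, and a simple nearest-centroid rule stands in for the much larger models used on real images.

```python
from math import dist

# Hypothetical labeled examples: (ear_pointiness, snout_length), both 0..1.
# These numbers are invented purely for illustration.
TRAINING_DATA = [
    ((0.9, 0.2), "cat"),
    ((0.8, 0.3), "cat"),
    ((0.7, 0.1), "cat"),
    ((0.3, 0.8), "dog"),
    ((0.2, 0.9), "dog"),
    ((0.4, 0.7), "dog"),
]


def train(examples):
    """Training: average the feature vectors of each label into a centroid."""
    sums = {}
    counts = {}
    for features, label in examples:
        total = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            total[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {
        label: tuple(v / counts[label] for v in total)
        for label, total in sums.items()
    }


def infer(model, features):
    """Inference: pick the label whose centroid is closest to the new point."""
    return min(model, key=lambda label: dist(model[label], features))


model = train(TRAINING_DATA)
# A new animal that was not in the training set.
print(infer(model, (0.85, 0.25)))
```

The labels attached to each example are what make this supervised: `train` plays the role of the adult with the picture book, and `infer` is the child meeting an animal it has not seen before.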

Moving to Microcontrollers

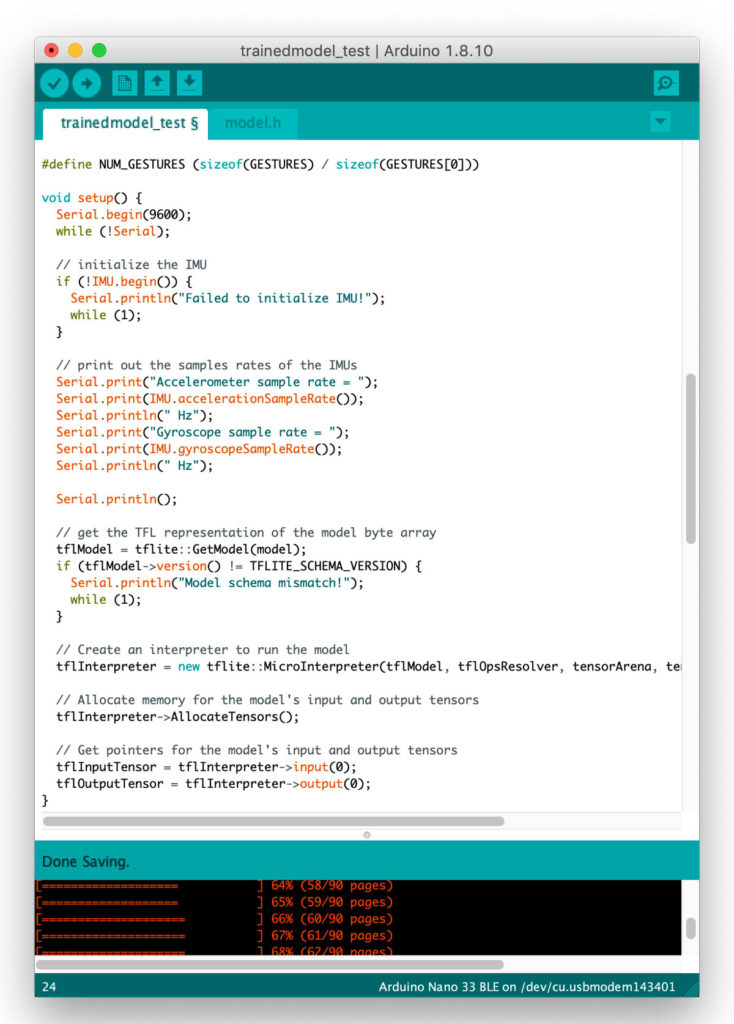

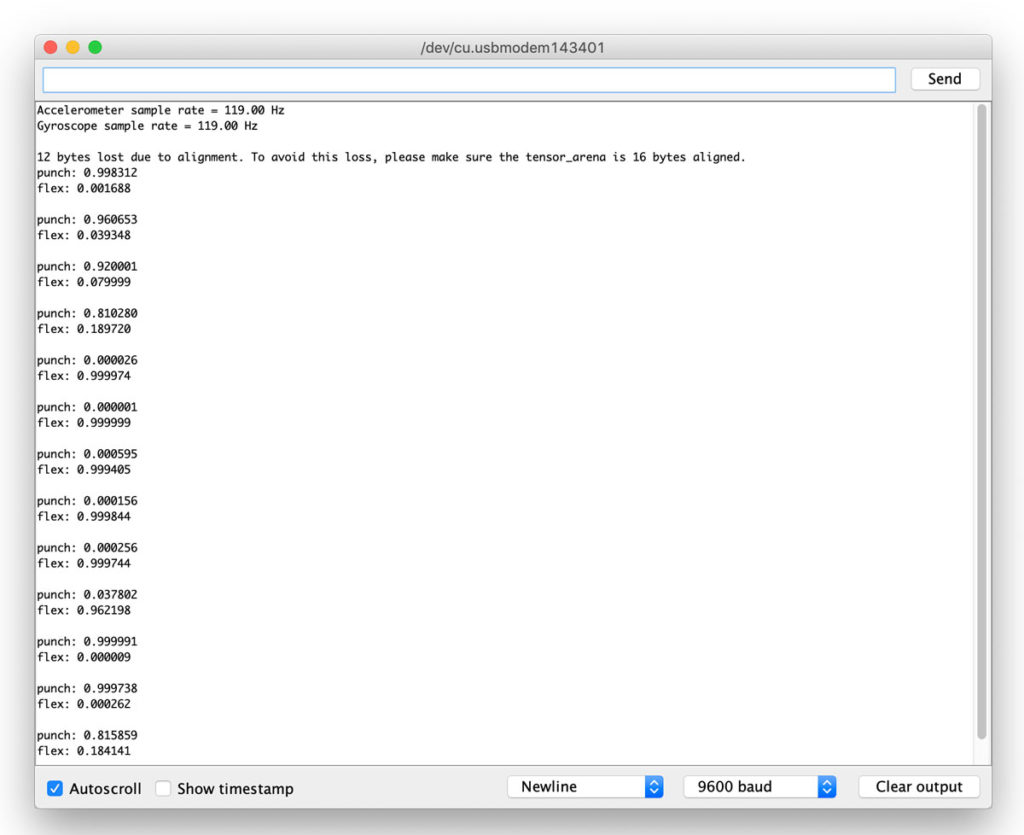

ML training requires more computing power than microcontrollers can offer. However, increasingly powerful hardware and slimmed-down software now let us run inference on MCUs using models that were trained elsewhere. TinyML is the new field of running ML on microcontrollers, using software technologies such as TensorFlow Lite.

There are huge advantages to running ML models on small, battery-powered boards that don’t need to be connected to the internet. Not only does this make the technology much more accessible, but devices no longer have to send your private data (such as video or sound) to the cloud to be analyzed. This is a big plus for privacy and being able to control your own information.

Bias Beware

Of course, there are things to be cautious about. People like to think computer programs are objective, but machine learning still relies on the data we give it, meaning that our technology is learning from our own, very human, biases.

I spoke to Dominic Pajak from Arduino about the ethics of ML. “When we educate people about these new tools we need to be clear about both their potential and their shortcomings,” Pajak said. “Machine learning relies on training data to determine its behavior, and so bias in the data will skew that behavior. It is still very much our responsibility to avoid these biases.”

Joy Buolamwini’s Algorithmic Justice League is battling bias in AI and ML; they helped persuade Amazon, IBM, and Microsoft to put a hold on facial recognition. Another interesting initiative is Responsible AI Licenses (RAIL), which restrict AI and ML from being used in harmful applications.

Maker-Friendly Machine Learning

There’s also a lot of work to do to make ML more accessible to makers and developers, including creating tools and documentation. Edge Impulse is an exciting new tool in this space: an online development platform that allows makers to easily create their own ML models without needing to understand the complicated details of libraries such as TensorFlow or PyTorch. Edge Impulse lets you use pre-built datasets, train new models in the cloud, explore your data using some very shiny visualization tools, and collect data using your mobile phone.

When choosing a board to use for your ML experiments, be wary of any marketing hype that claims a board has special AI capabilities. Outside of true AI-optimized hardware such as Google TPU or Nvidia Jetson boards, almost all microcontrollers are capable of running tiny ML and AI algorithms; it’s just a question of memory and processor speed. I’ve seen the “AI” moniker applied to boards with an ARM Cortex-M4, but really any Cortex-M4 board can run TinyML well.

Shawn Hymel is my go-to expert for machine learning on microcontrollers. His accessible and fun YouTube videos got me going with my own experiments. I asked him what’s on his checklist for an ML microcontroller: “I like to have at least a 32-bit processor running at 80MHz with 50kB of RAM and 100kB of flash to start doing anything useful with machine learning,” Hymel told me. “The specs are obviously negotiable, depending on what you need to do: accelerometer anomaly detection requires less processing power than voice recognition, which in turn requires less processing power than vision object detection.”
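Hymel's checklist is easy to turn into a quick comparison tool. The sketch below, in Python, checks a board's specs against the four starting-point numbers from his quote. The `BoardSpec` type, the `shortfalls` helper, and both example boards are hypothetical and exist only for illustration; plug in figures from a real datasheet before drawing conclusions.

```python
from dataclasses import dataclass


@dataclass
class BoardSpec:
    name: str
    cpu_bits: int
    clock_mhz: float
    ram_kb: float
    flash_kb: float


# Starting-point thresholds taken from Hymel's quote above.
MIN_CPU_BITS = 32
MIN_CLOCK_MHZ = 80
MIN_RAM_KB = 50
MIN_FLASH_KB = 100


def shortfalls(board):
    """Return a description of every spec that falls below the starting point."""
    checks = [
        ("cpu_bits", board.cpu_bits, MIN_CPU_BITS),
        ("clock_mhz", board.clock_mhz, MIN_CLOCK_MHZ),
        ("ram_kb", board.ram_kb, MIN_RAM_KB),
        ("flash_kb", board.flash_kb, MIN_FLASH_KB),
    ]
    return [
        f"{field}: {value} < {minimum}"
        for field, value, minimum in checks
        if value < minimum
    ]


# Hypothetical boards, not real products.
boards = [
    BoardSpec("example-8bit", cpu_bits=8, clock_mhz=16, ram_kb=2, flash_kb=32),
    BoardSpec("example-m4", cpu_bits=32, clock_mhz=120, ram_kb=192, flash_kb=512),
]

for board in boards:
    missing = shortfalls(board)
    status = "meets the baseline" if not missing else "falls short on " + "; ".join(missing)
    print(f"{board.name}: {status}")
```

Because Hymel describes the specs as negotiable, the helper reports which numbers fall short rather than giving a hard pass or fail. A board that misses only on clock speed may still be fine for a light task such as accelerometer anomaly detection.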

The two ML-ready boards I’ve had the most fun with are Adafruit’s EdgeBadge and the Arduino Nano 33 BLE Sense. The EdgeBadge is a credit card-sized badge that supports TensorFlow Lite. It has all the bells and whistles you could hope for: an onboard microphone, a color TFT display, an accelerometer, a light sensor, a buzzer and, of course, NeoPixel blinkenlights.

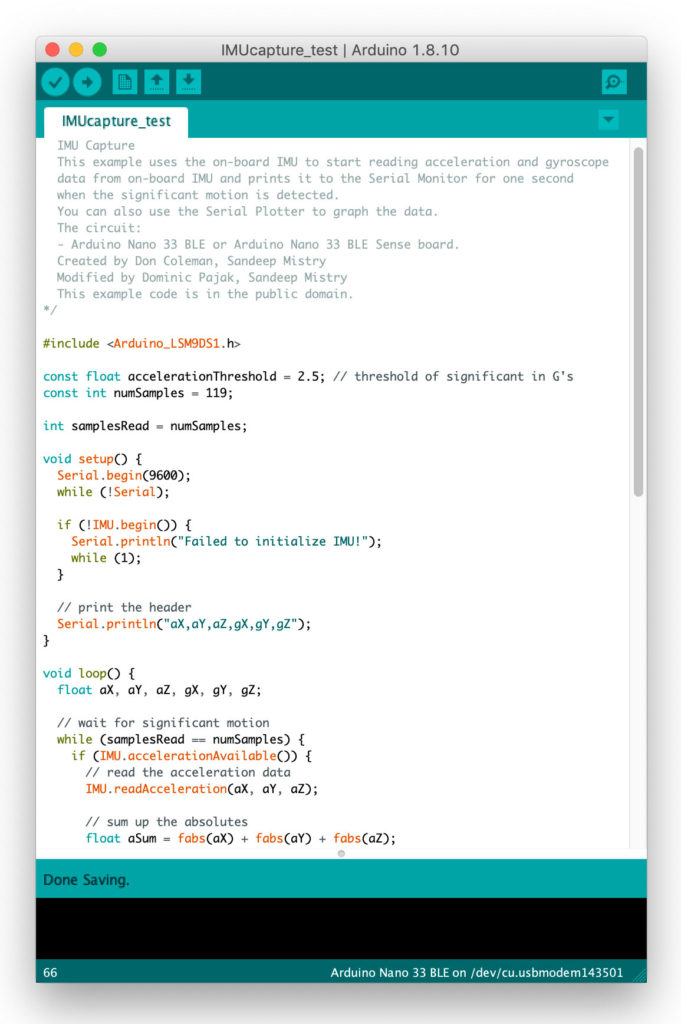

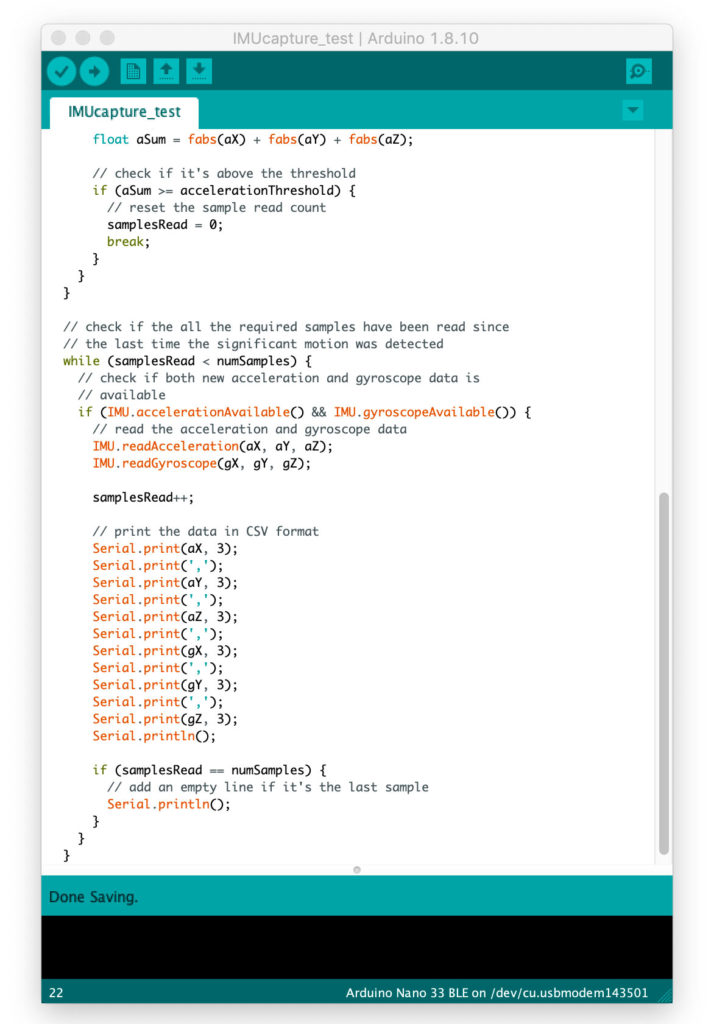

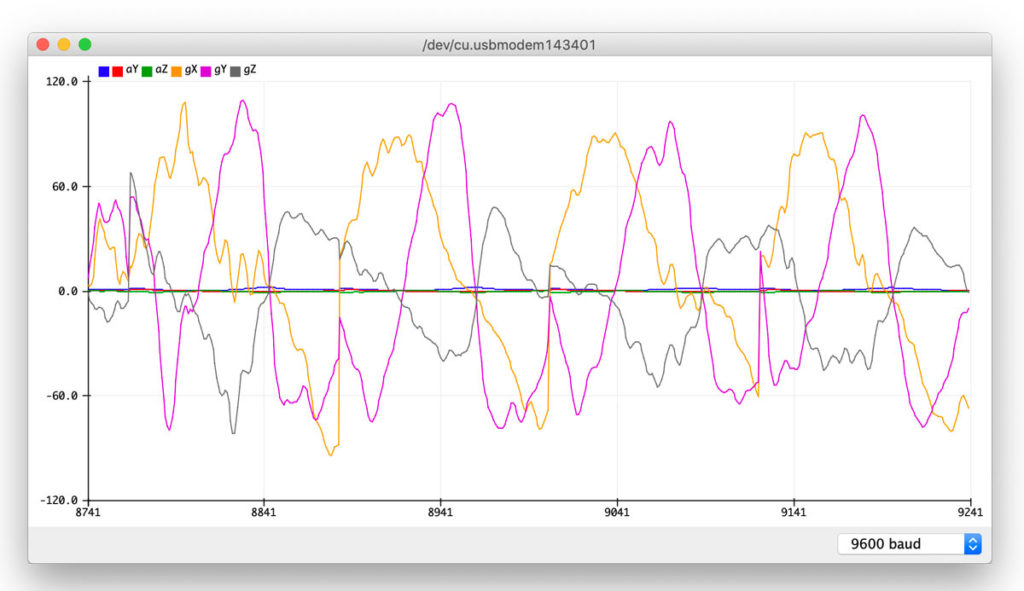

Here I’ll take you through the basics of how to sense gestures with an Arduino Nano 33 BLE Sense using TinyML. I also recommend Andrew Ng’s videos on Coursera and Shawn Hymel’s excellent YouTube series on machine learning. You can take a TinyML course on edX that features Pete Warden from Google, who coined the term and also authored a fantastic book on the subject. For keeping up to date on the latest developments, seek out Alasdair Allan’s interesting and informative articles and blog posts on embedded ML; you can find him on Twitter and Medium @aallan.

Project: Recognize Gestures Using Machine Learning on an Arduino

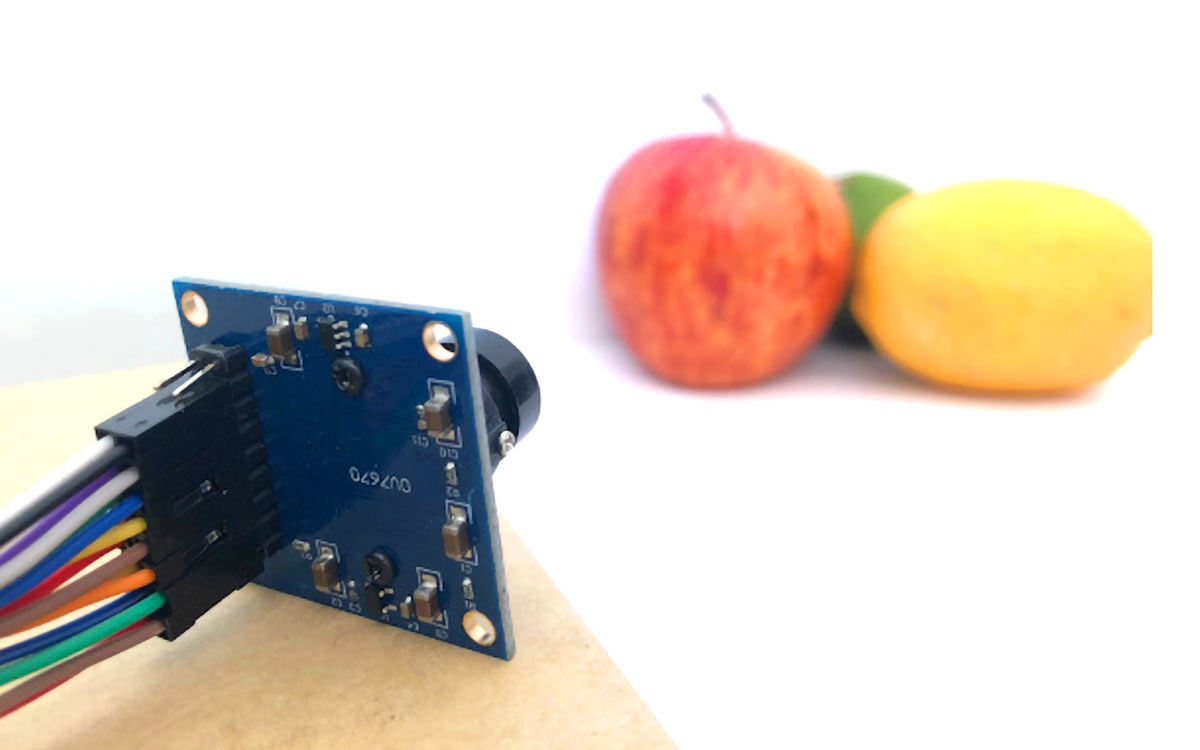

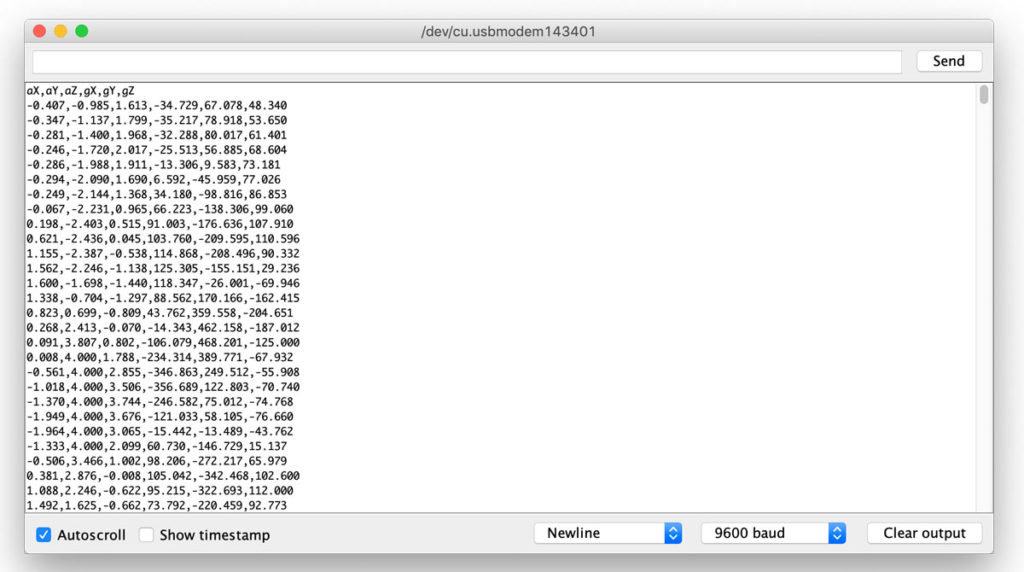

Since I got my hands on the Arduino Nano 33 BLE Sense at Maker Faire Rome last year, this little board has fast become one of my favorite Arduino options (Figure A). It uses a high-performance Cortex-M4 microcontroller — great for TinyML — and loads of onboard sensors including motion, color, proximity, and a microphone. The cute little Nano form factor means it works really well for compact or wearable projects too. Let’s take a look at how to use this board with TinyML to recognize gestures.

TinyML in the Wild: Machine Learning Projects From the Community

CORVID-19: Crow Paparazzo

by Stephanie Nemeth

The longing for interaction during the Covid-19 lockdown inspired Stephanie Nemeth, a software engineer at GitHub, to create a project that would capture images of the friendly crow that regularly visited her window and then share them with the world. Her project uses a Raspberry Pi 4, a PIR sensor, a Pi camera, Node.js, and TensorFlow.js. She used Google’s Teachable Machine to train an image classification model in the browser on photos of the crow. In the beginning, she had to keep retraining the model with newly captured photos so it could recognize the crow. A PIR sensor detects motion and triggers the Pi camera. The resulting photos are then run through the trained model, and, if the friendly corvid is recognized, the photos are tweeted to the crow’s account using the Twitter API. Find the crow on Twitter @orvillethecrow or find Stephanie @stephaniecodes.
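The flow of the crow camera (motion, photo, classification, tweet) can be sketched in a few lines. Nemeth's project runs on Node.js and TensorFlow.js; the version below is in Python and is not her code. The three callables are hypothetical stand-ins for the camera, the trained model, and the Twitter client, and the 0.8 confidence cutoff is an assumption, since the write-up does not give one.

```python
CONFIDENCE_THRESHOLD = 0.8  # assumed cutoff; the write-up does not give one


def handle_motion(capture_photo, classify, post_photo,
                  threshold=CONFIDENCE_THRESHOLD):
    """Run one pass of the pipeline after the motion sensor fires.

    capture_photo() -> photo, classify(photo) -> (label, confidence) and
    post_photo(photo) are hypothetical stand-ins for the camera, the trained
    model, and the Twitter client.
    """
    photo = capture_photo()
    label, confidence = classify(photo)
    if label == "crow" and confidence >= threshold:
        post_photo(photo)
        return True
    return False


# Dry run with fake components so the flow can be followed without hardware.
posted = []
handle_motion(lambda: "photo-1", lambda p: ("crow", 0.93), posted.append)
handle_motion(lambda: "photo-2", lambda p: ("empty windowsill", 0.88), posted.append)
handle_motion(lambda: "photo-3", lambda p: ("crow", 0.41), posted.append)
print(posted)
```

Only the first photo is posted: the second is not a crow, and the third is a crow the model is not confident about. Passing the components in as functions keeps the decision logic testable without a Raspberry Pi attached.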

Nintendo Voice Hack

by Shawn Hymel

Embedded engineer and content creator Shawn Hymel imagined a new style of video game controller that requires players to yell directions or names of special moves. He modified a Super Nintendo (SNES Classic Edition) controller to respond to the famous Street Fighter II phrase “hadouken.” As a proof of concept, he trained a neural network to recognize the spoken word “hadouken!” and then loaded the trained model onto an Adafruit Feather STM32F405 Express, which uses TensorFlow Lite for Microcontrollers to listen for the keyword via a MEMS microphone. Upon hearing the keyword, the controller automatically presses its buttons in the pattern necessary to perform the move in the video game, rewarding the player with a bright ball of energy without the need to remember the exact button combination. Shawn has a highly entertaining series of videos explaining how he made this project, and other machine learning projects, on Digi-Key’s YouTube channel. You can also find him on Twitter @ShawnHymel.
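The step from "keyword heard" to "buttons pressed" is simple enough to sketch. The Python below is not Hymel's firmware, which runs on the microcontroller itself. The button sequence (a quarter-circle forward plus punch, with the character facing right), the 0.7 confidence cutoff, and the `set_buttons` callback are all assumptions made for illustration.

```python
# Assumed input for the move (quarter-circle forward plus punch, character
# facing right); the write-up does not spell out the exact sequence.
HADOUKEN_STEPS = [
    {"down"},
    {"down", "right"},
    {"right", "punch"},
]

KEYWORD_THRESHOLD = 0.7  # assumed confidence cutoff


def on_keyword_score(score, set_buttons, threshold=KEYWORD_THRESHOLD):
    """Replay the button sequence when the keyword score is high enough.

    set_buttons(pressed) is a hypothetical callback that holds the given set
    of buttons for one step; on real hardware it would drive the controller's
    button lines.
    """
    if score < threshold:
        return False
    for step in HADOUKEN_STEPS:
        set_buttons(step)
    set_buttons(set())  # release everything afterwards
    return True


log = []
on_keyword_score(0.2, log.append)   # background noise: nothing happens
on_keyword_score(0.95, log.append)  # confident detection: replay the move
print(log)
```

The model's only job is to produce the score; everything after the threshold check is ordinary, deterministic code.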

Constellation Dress

by Kitty Yeung

Kitty Yeung is a physicist who works in quantum computing at Microsoft. She also makes beautiful science-inspired clothing and accessories, including a dress that uses ML to recognize her gestures and display corresponding star constellations. She uses a pattern-matching engine, an accelerometer, an Arduino 101, and LEDs arranged in configurations of four constellations: the Big Dipper (Ursa Major), Cassiopeia, Cygnus, and Orion. Yeung trained the pattern-matching engine on her gestures, as detected by the accelerometer, so that each one maps to one of the four constellations. To learn about the project or see more of her handmade and 3D-printed clothing, check out her website kittyyeung.com or find her on Twitter @KittyArtPhysics.

Worm Bot

by Nathan Griffith

Artificial neural networks can be taught to navigate a variety of problems, but the Nematoduino robot takes a different approach: emulating nature. Using a small-footprint emulation of the C. elegans nematode’s nervous system, this project aims to provide a framework for creating simple, organically derived “bump-and-turn” robots on a variety of low-cost Arduino-compatible boards. Nematoduino was created by astrophysicist and tinkerer Nathan Griffith, using research by the OpenWorm project and an earlier Python-based implementation as a starting point. You can find a write-up of his wriggly robot project on the Arduino Project Hub by searching for “nematoduino,” and you can find Griffith on Twitter @ComradeRobot.