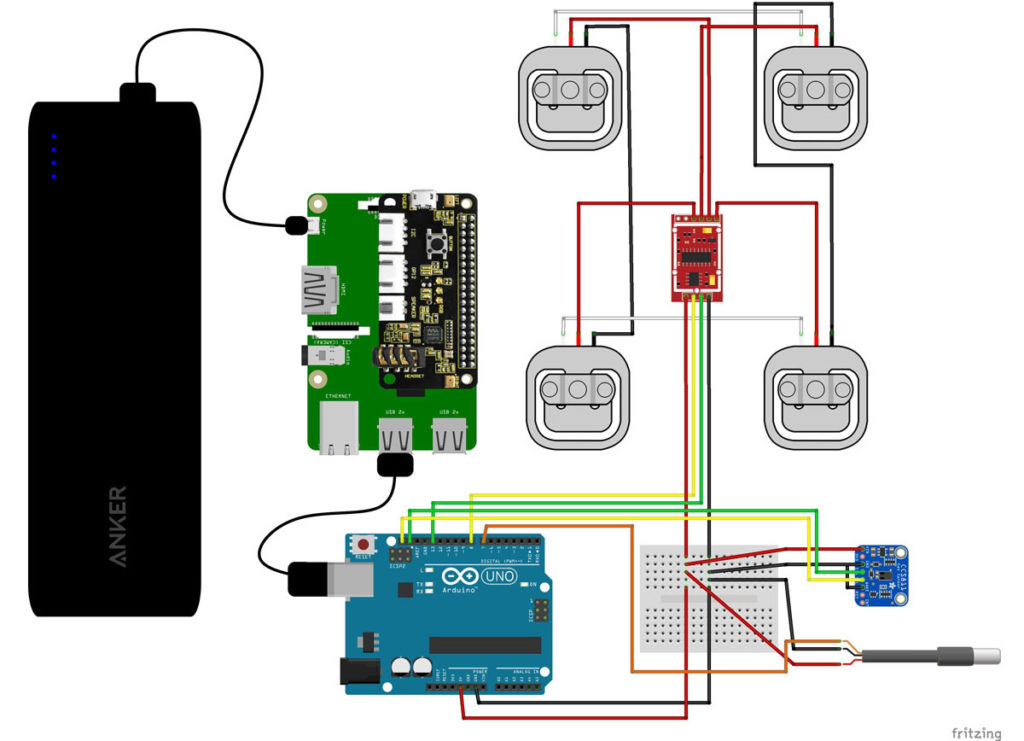

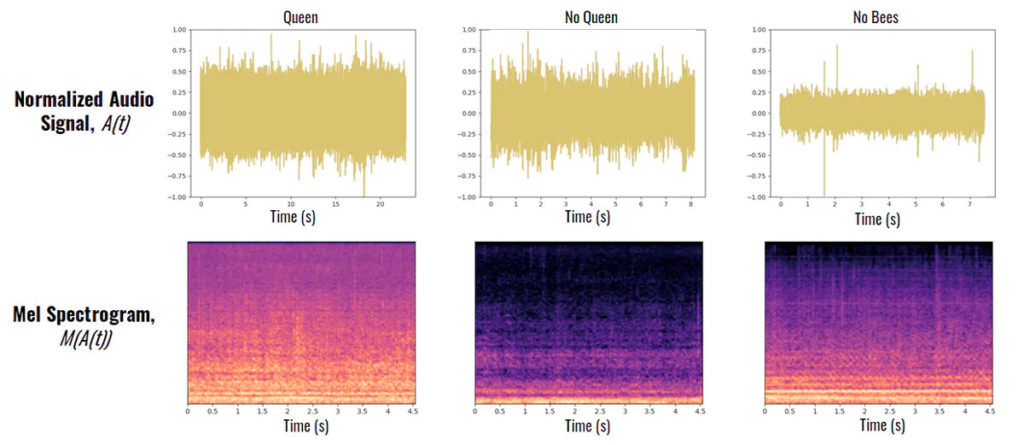

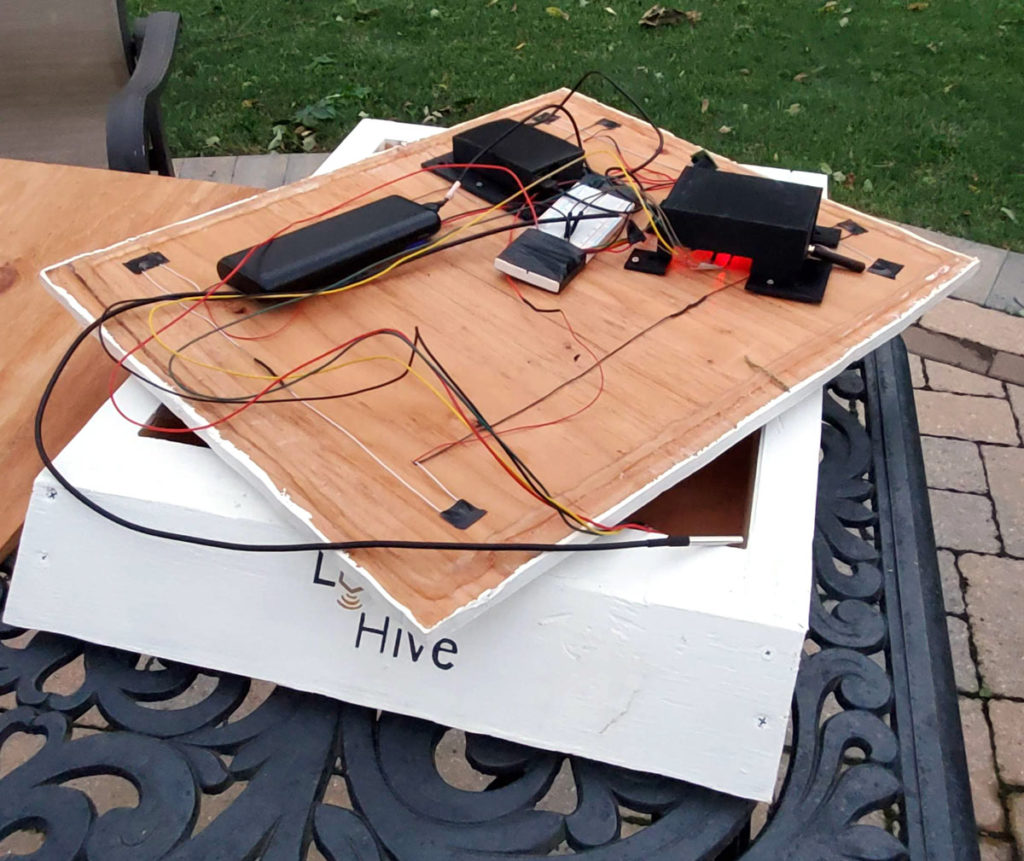

Whether you’re an individual hobbyist or a commercial farmer who relies on large-scale pollination, monitoring your hive with simple sensor data can help you detect problematic trends in colony health. Our project, LongHive, is a full-service infrastructure for beehive maintenance, enabled by deep learning (DL) and LoRaWAN communications via the Helium Network. Data-driven beekeepers can install the LongHive system underneath a standard beehive and take advantage of its suite of sensors, its pre-trained convolutional neural network (CNN) for classifying the hive’s acoustic signatures, and its web-based dashboard for easy visualization of how the sensor signals change over time.

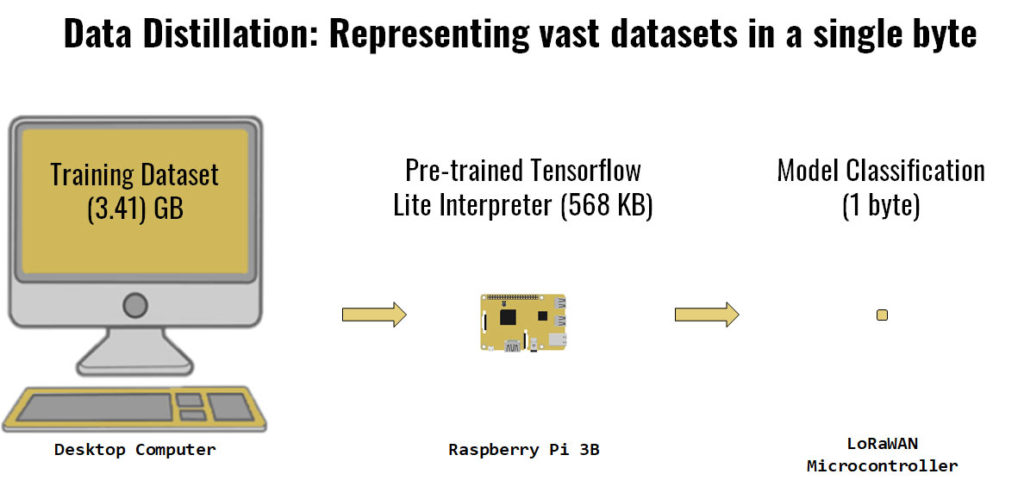

Our goal is to help beekeepers make the most of their time and reduce the frequency of intrusive hive inspections while still detecting problems within the hive. Unlike limited-range, power-hungry protocols such as Wi-Fi or Bluetooth, the Helium Network’s LongFi architecture enables low-power LoRaWAN devices that can operate in much more remote environments. We combine edge computing with a pre-trained neural network so that our DL classifier sidesteps the most glaring constraint of LoRaWAN networks: low data throughput. A Raspberry Pi bears the computational burden locally, so only the network output (the classification itself) needs to be transmitted over LongFi.
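To make the bandwidth argument concrete, here is a minimal sketch of how a classification result could be packed into a few bytes before it is sent as a LoRaWAN uplink. It is an illustration, not LongHive's actual payload format: the label set, the `encode_payload` and `decode_payload` helpers, the byte layout, and the temperature field are all assumptions made for this example.

```python
import struct

# Hypothetical label set; the real classifier's classes may differ.
LABELS = ("healthy", "queenless", "swarming")

# Assumed layout (big-endian, 4 bytes total):
#   uint8  class index
#   uint8  confidence scaled to 0..255
#   int16  temperature in hundredths of a degree Celsius
PAYLOAD_FORMAT = ">BBh"


def encode_payload(label: str, confidence: float, temperature_c: float) -> bytes:
    """Pack one classification and one sensor reading into a compact uplink."""
    class_index = LABELS.index(label)
    # Clamp to [0, 1] before scaling so the value always fits in one byte.
    conf_byte = round(max(0.0, min(1.0, confidence)) * 255)
    temp_centi = round(temperature_c * 100)
    return struct.pack(PAYLOAD_FORMAT, class_index, conf_byte, temp_centi)


def decode_payload(payload: bytes) -> dict:
    """Reverse of encode_payload, as a dashboard backend might do it."""
    class_index, conf_byte, temp_centi = struct.unpack(PAYLOAD_FORMAT, payload)
    return {
        "label": LABELS[class_index],
        "confidence": conf_byte / 255,
        "temperature_c": temp_centi / 100,
    }


if __name__ == "__main__":
    payload = encode_payload("queenless", 0.9, 34.56)
    print(len(payload), payload.hex())
    print(decode_payload(payload))
```

Under these assumptions, each report is 4 bytes, whereas even one second of 16-bit mono audio sampled at 16 kHz is 32,000 bytes. That gap is why the audio stays on the Raspberry Pi and only the result goes over the air.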