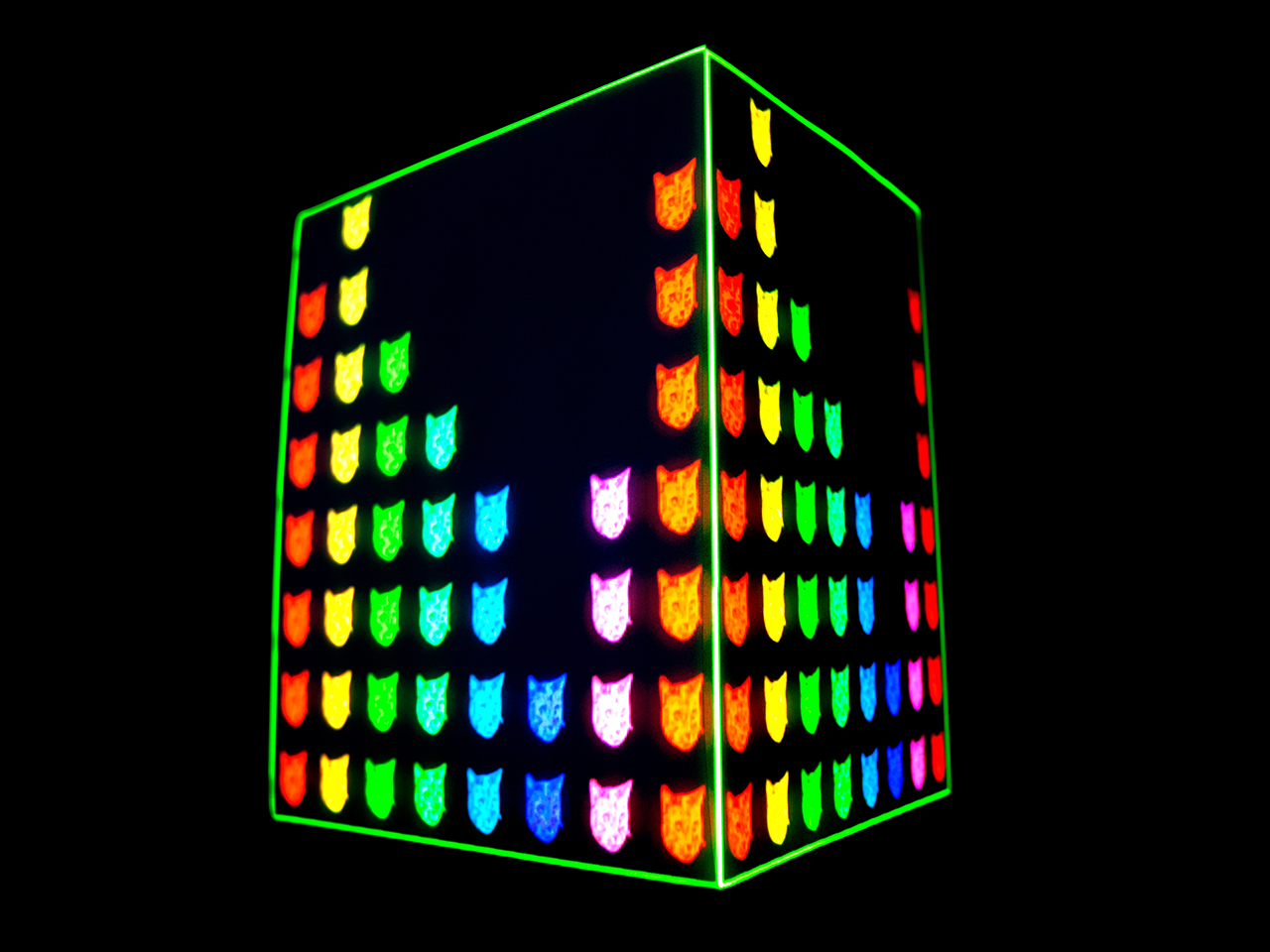

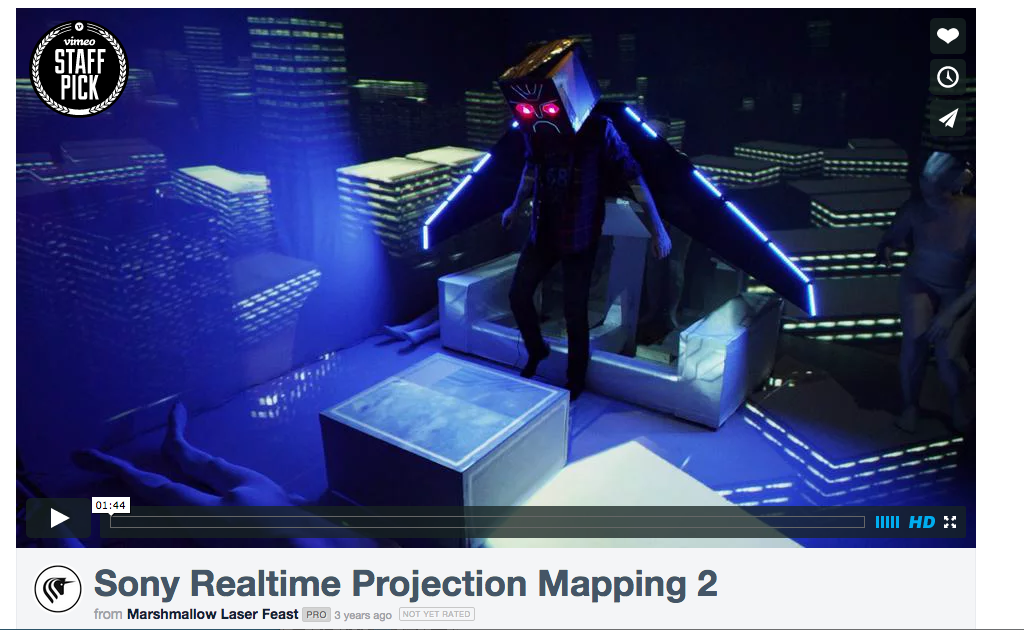

Projection mapping is nearly everywhere these days, from advertising campaigns to huge stage shows. This isn’t really a surprise: done well, it can immediately transform a space into something unreal.

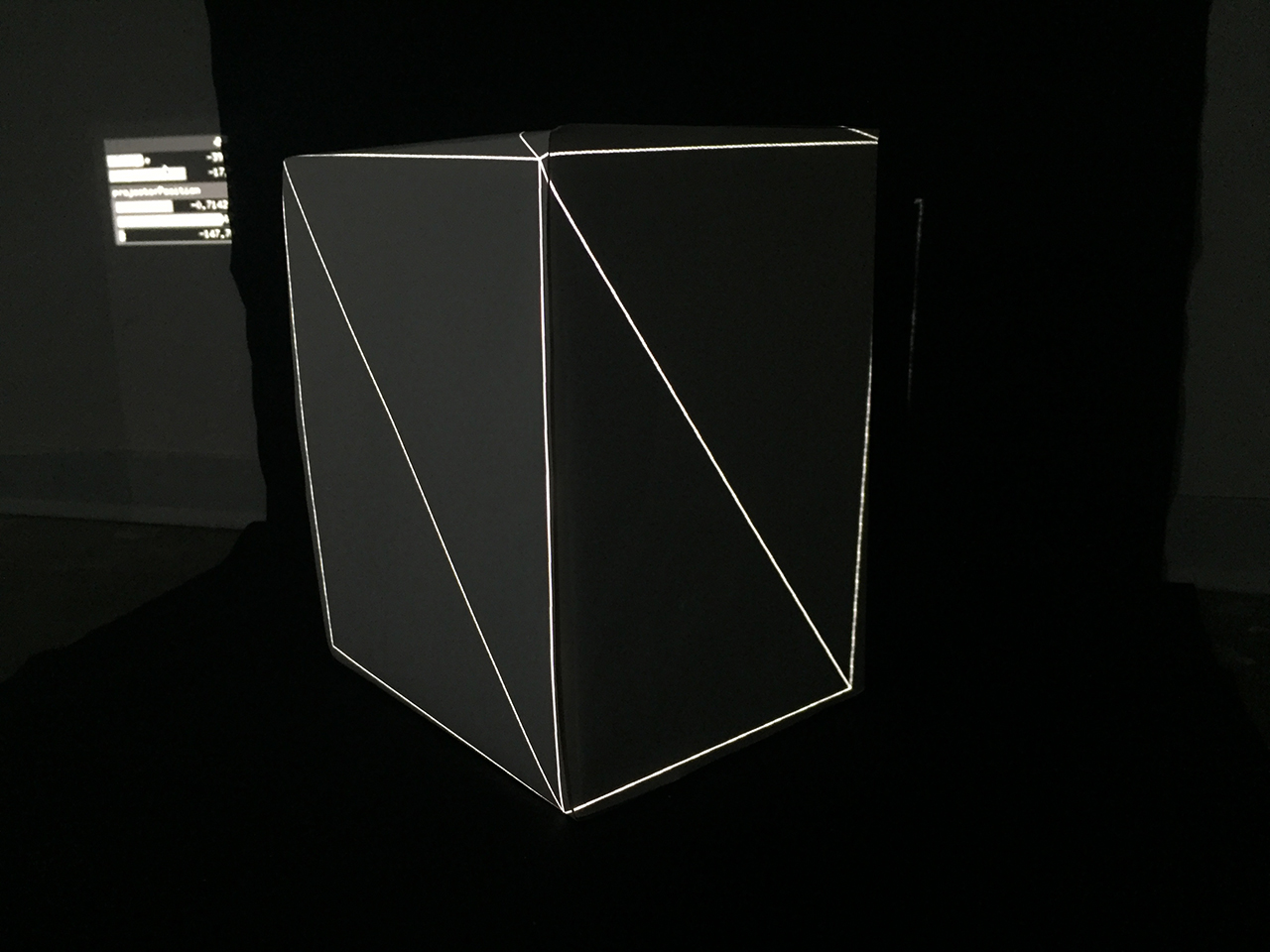

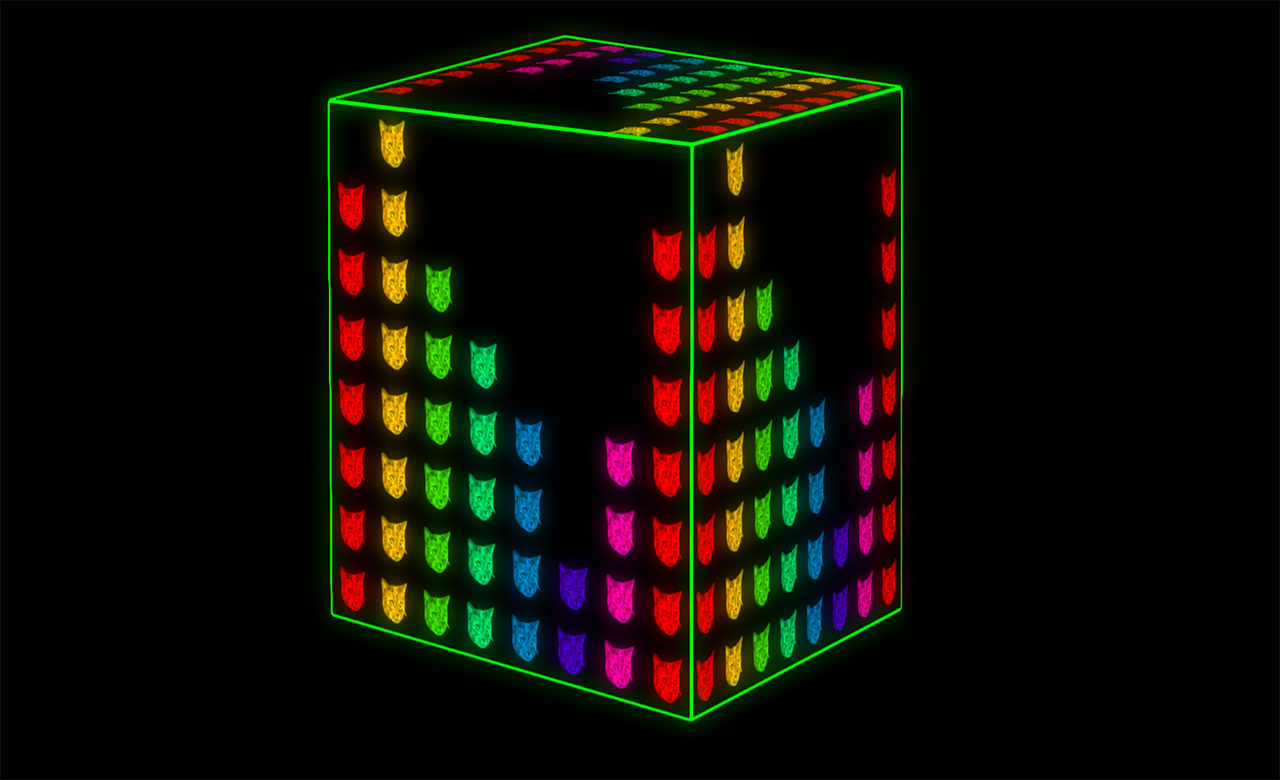

Before we begin, here are a few recent examples to whet your appetite: The Box combines projection mapping with robotics to map moving canvases. Omicron is a permanent, large-scale projection mapping of a beautifully architected 65-meter dome. One Degree of Freedom is my own example of interactive projection mapping, using a sensor to track the movement of the mapped object.

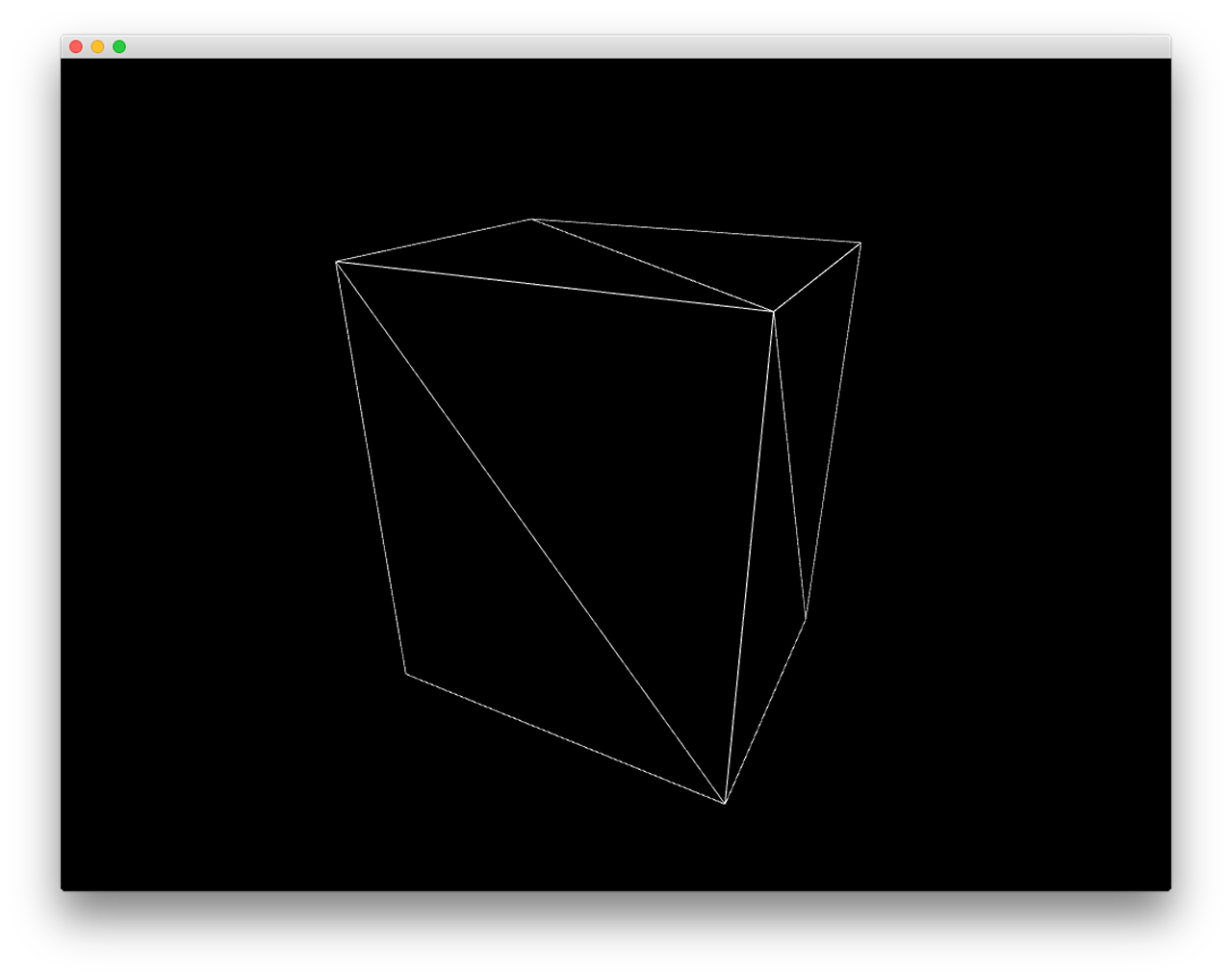

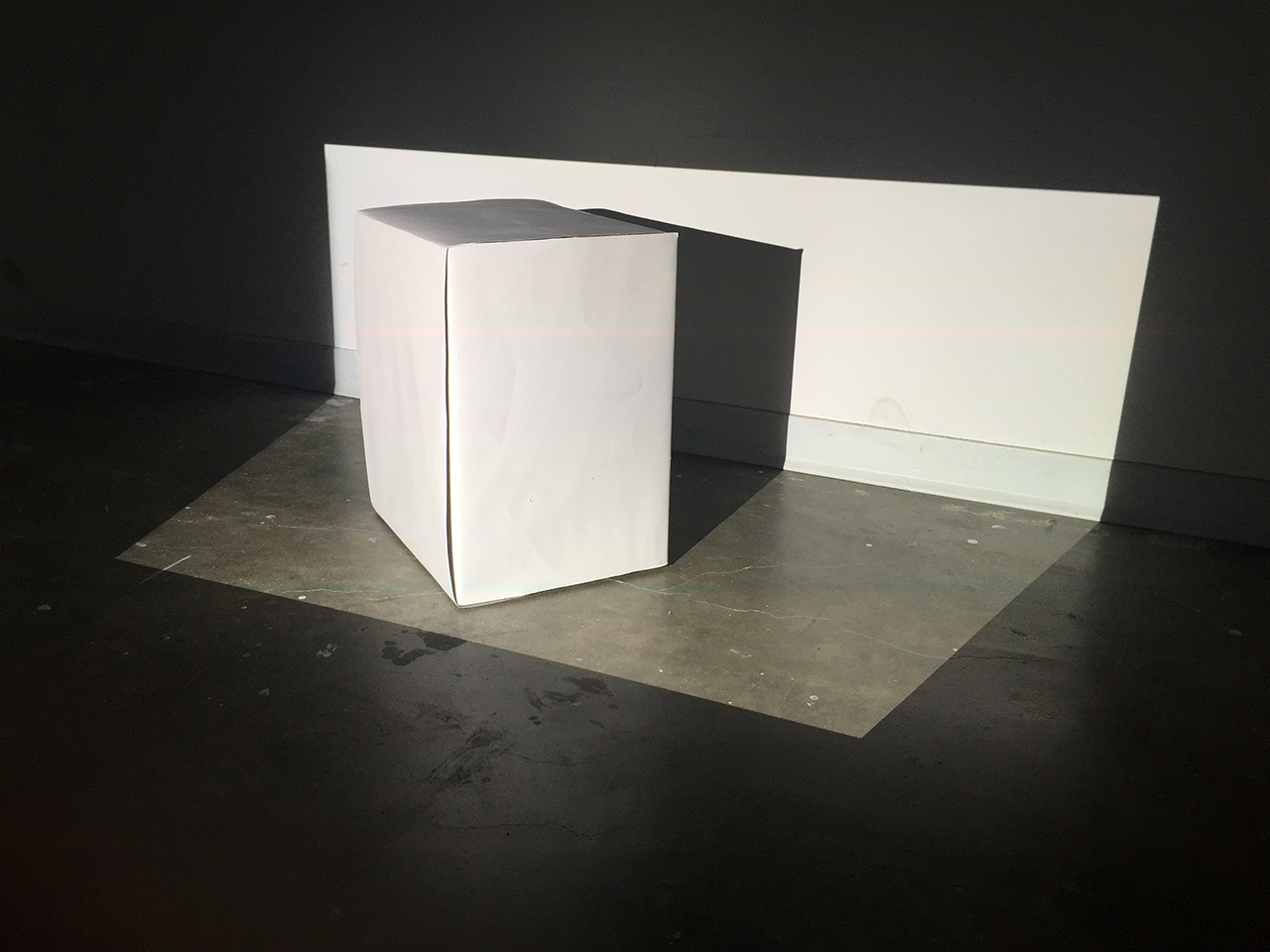

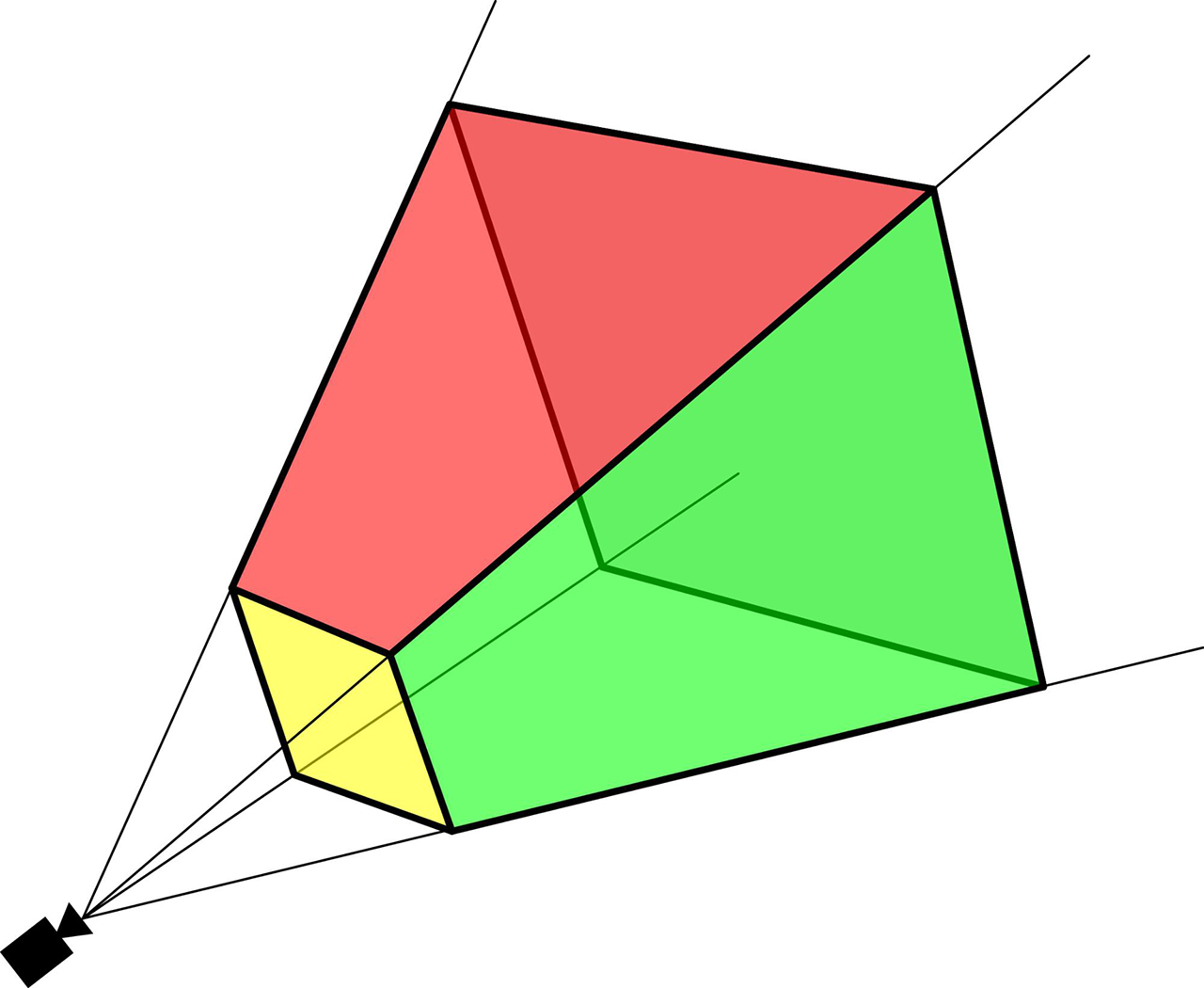

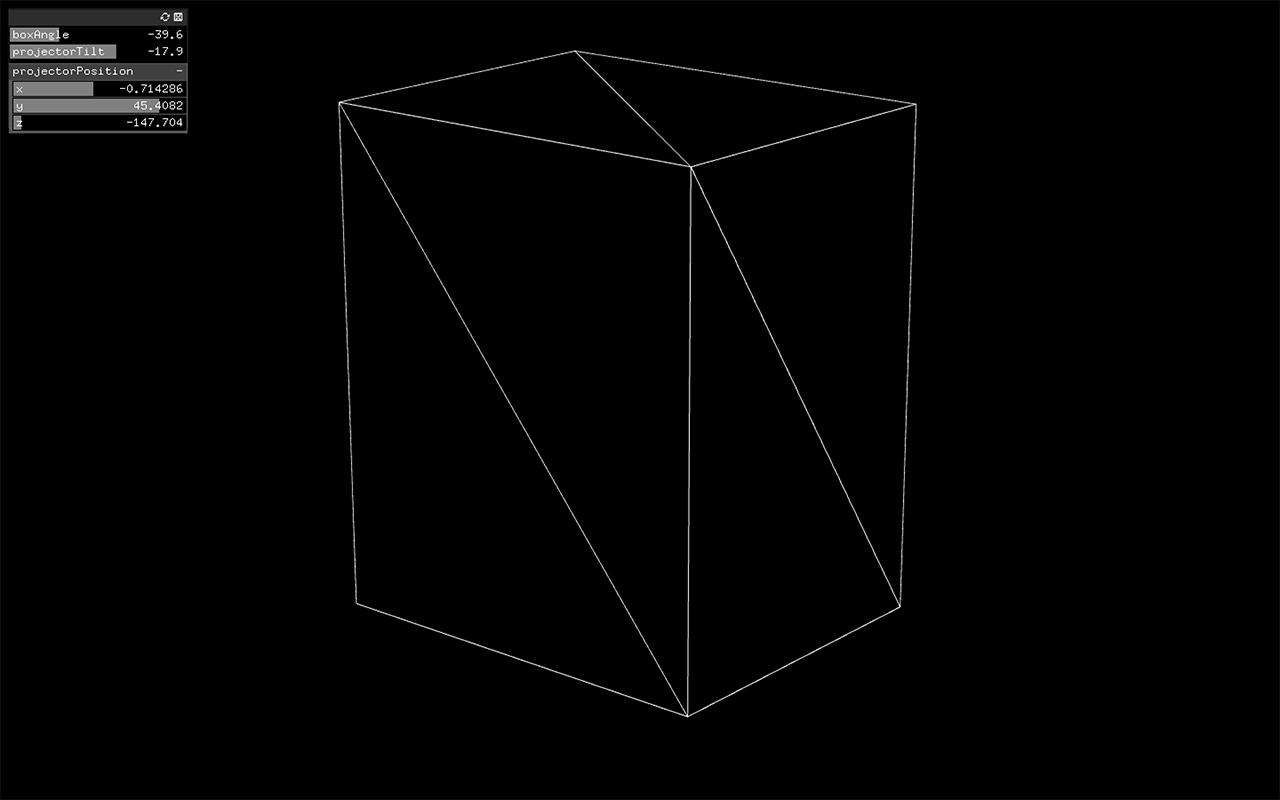

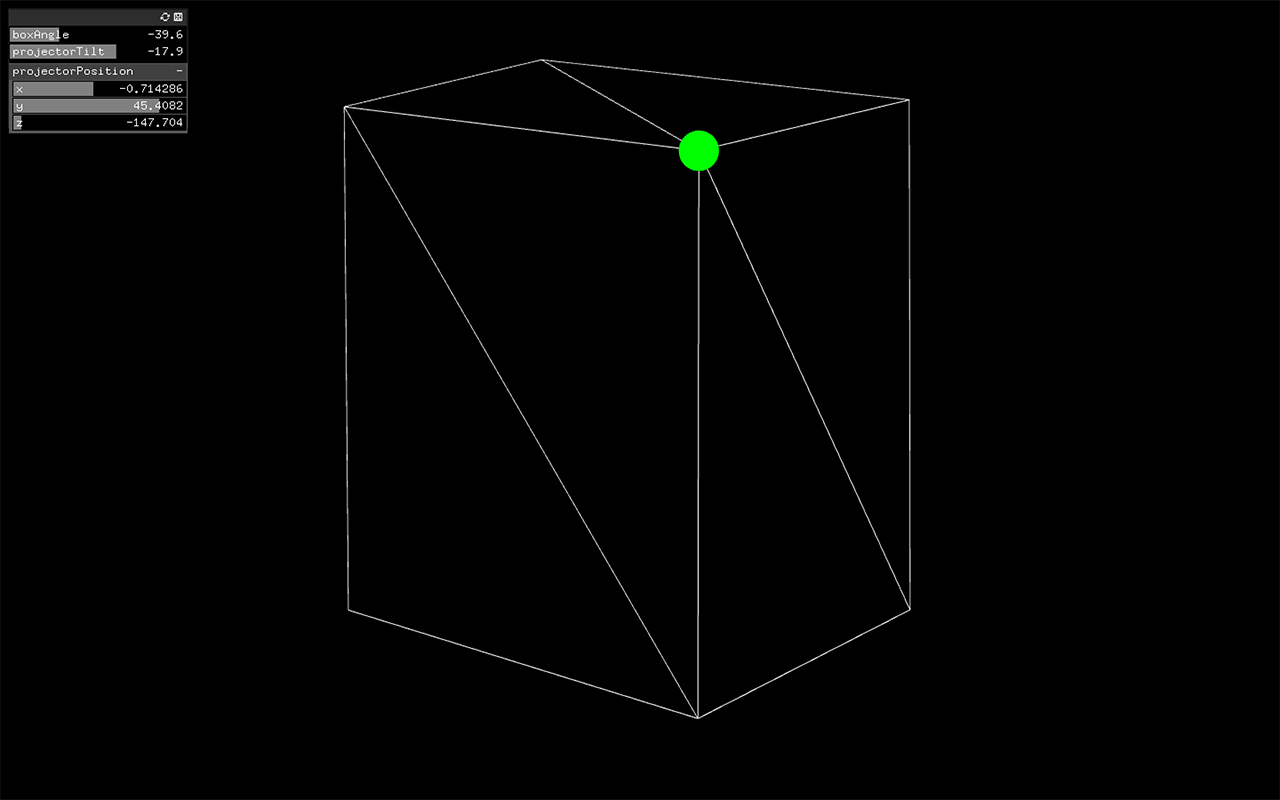

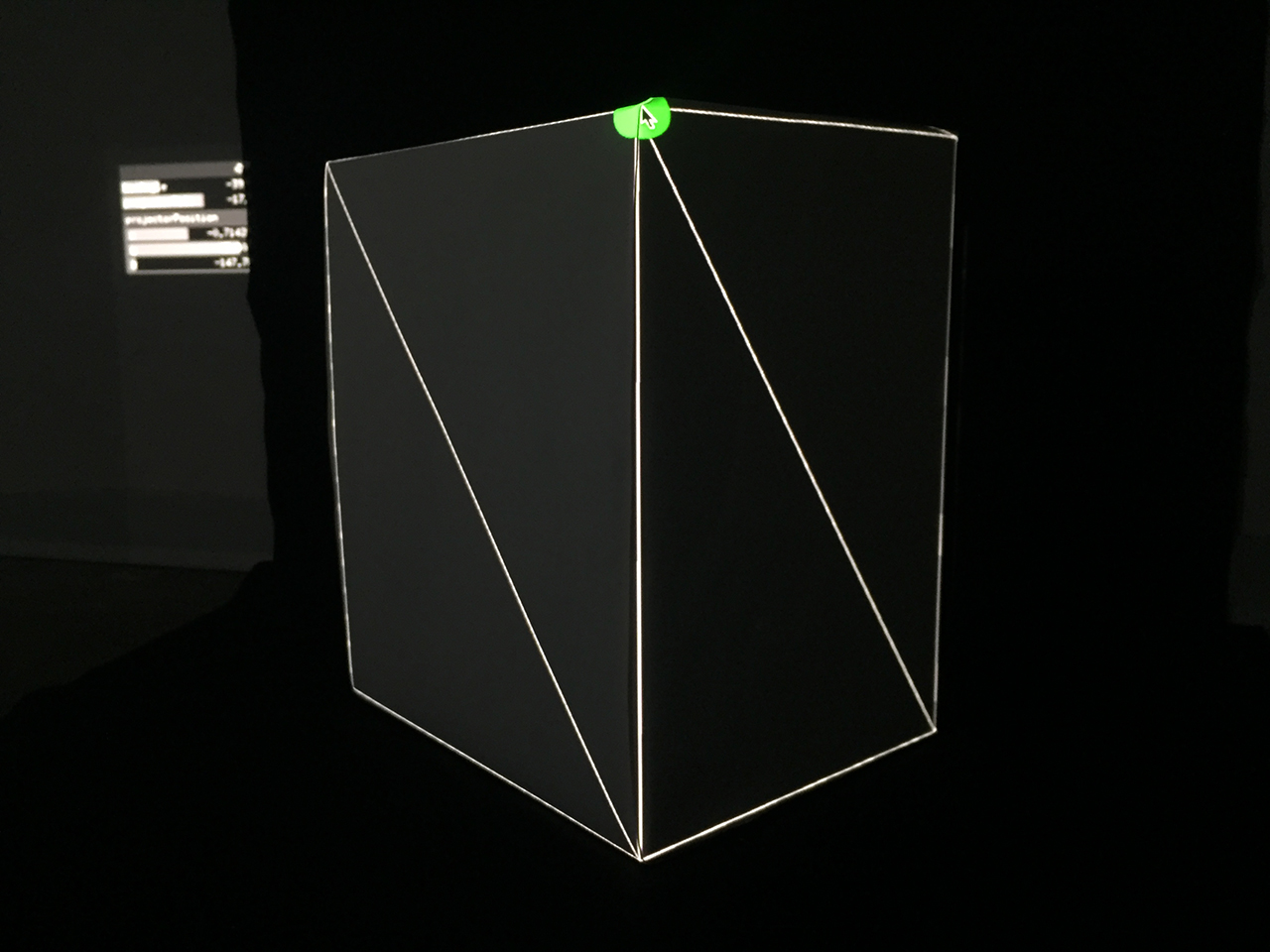

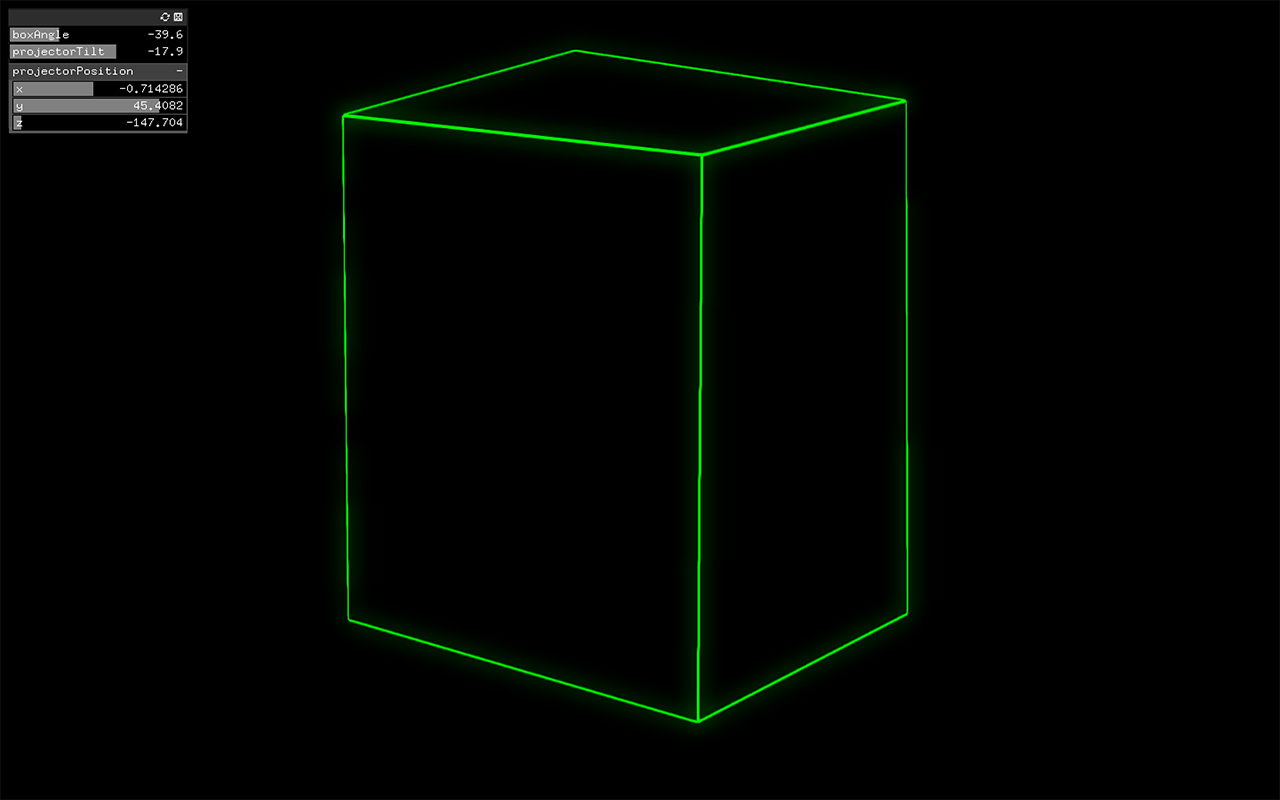

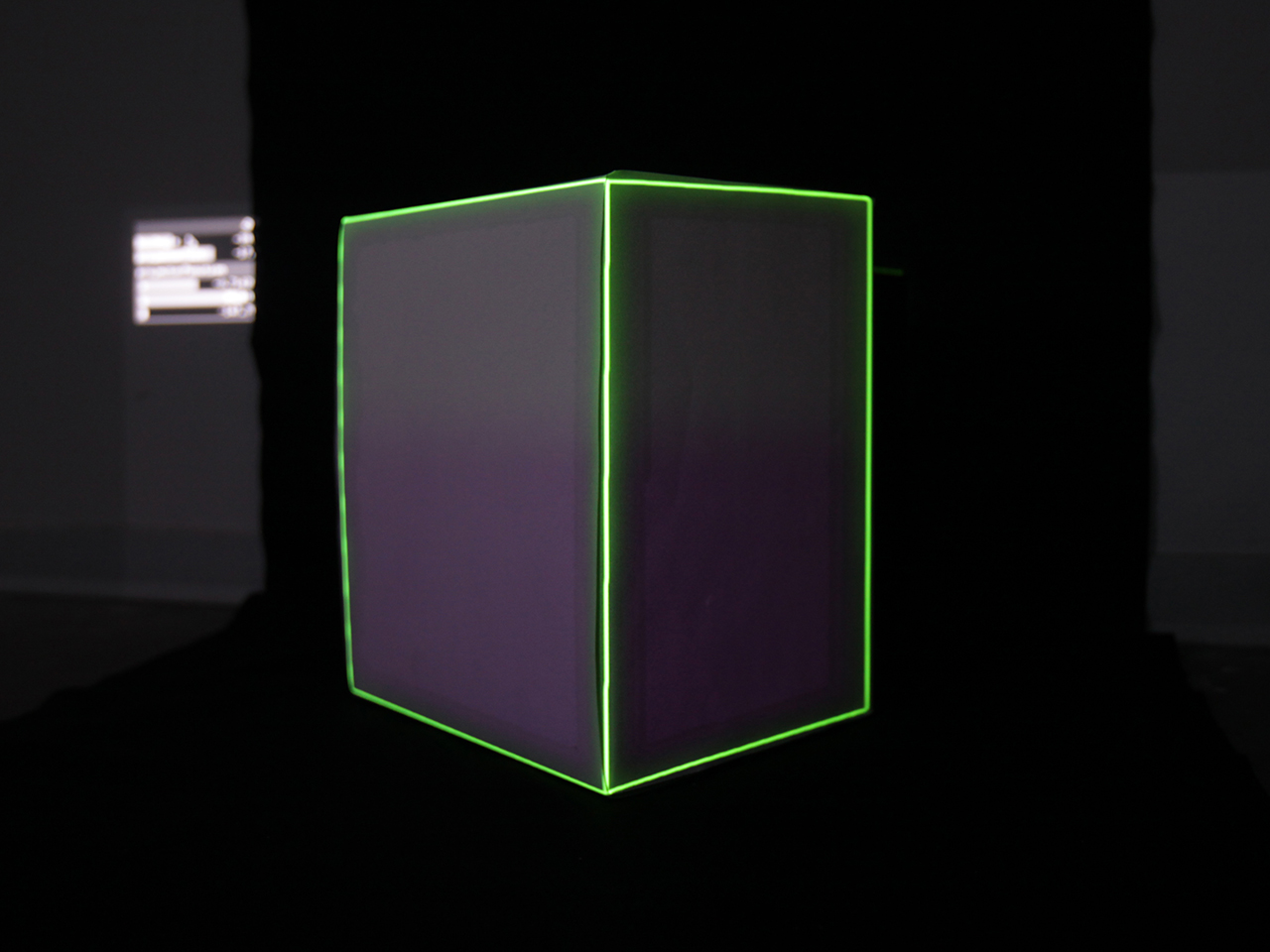

In its simplest form, projection mapping involves creating visuals that align with real-world objects and then projecting them onto those objects. Normally, this process is done using computer graphics. One approach is to generate these graphics in real time, where the computer renders them on the fly, similar to the way a computer game generates graphics. The advantage of this approach is that the visuals can be tweaked instantly (for instance, if the projection doesn’t align), and they can be interactive and also generative (meaning that the graphics could be constantly changing without ever repeating).
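To make the idea of aligning visuals with a real-world object a little more concrete, here is a minimal sketch in plain C++ (no openFrameworks required). It assumes the object is a flat rectangular surface whose four corners have been located in projector pixel coordinates, and it uses simple bilinear interpolation to place content inside that quad. The names `Point2` and `warpToQuad` are hypothetical and are not part of openFrameworks or any addon. Bilinear interpolation is only an approximation here; a perspective-correct mapping would typically use a homography instead.

```cpp
#include <array>
#include <cassert>

// A 2D point in projector pixel coordinates (hypothetical type for this sketch).
struct Point2 {
    float x;
    float y;
};

// Hypothetical helper: maps normalized content coordinates (u, v), each in
// [0, 1], to a point inside the quad described by four corners, using
// bilinear interpolation.
// Corner order: top-left, top-right, bottom-right, bottom-left.
Point2 warpToQuad(const std::array<Point2, 4>& corners, float u, float v) {
    assert(u >= 0.0f && u <= 1.0f && v >= 0.0f && v <= 1.0f);

    // Interpolate along the top edge and the bottom edge...
    const Point2 top{corners[0].x + u * (corners[1].x - corners[0].x),
                     corners[0].y + u * (corners[1].y - corners[0].y)};
    const Point2 bottom{corners[3].x + u * (corners[2].x - corners[3].x),
                        corners[3].y + u * (corners[2].y - corners[3].y)};

    // ...then between those two points.
    return Point2{top.x + v * (bottom.x - top.x),
                  top.y + v * (bottom.y - top.y)};
}
```

In a real-time setup, "tweaking" the alignment amounts to nudging one of the four corners and calling `warpToQuad` again on the next frame, which is why misalignment can be corrected instantly rather than by re-rendering a movie.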

The other approach is to pre-render a movie to project. This might be done with a software package such as Adobe After Effects. The advantage of this approach is that much more computationally intensive rendering algorithms can be used.

There are already quite a few articles out there that cover how to use various bits of software to do projection mapping. This article aims to cover some of the fundamental ideas behind projection mapping. In order to do this we are going to write our own projection mapping software from “first principles” using openFrameworks. However, the concepts presented here should be useful when approaching any type of projection mapping.

Prerequisites

Some familiarity with C++ and openFrameworks (OF) is necessary to get the most out of this article. That said, there are example projects, so if you want, you can skip to the end of each step and compile and run the program. If you’ve never used openFrameworks before, then I’d advise you to have a look at some of the tutorials on the openFrameworks website.

Before we get started, you’ll need to download OF 0.9 (available here). Once you’ve downloaded it, have a look at the IDE setup guide (linked to from the download page) for the operating system that you are using.

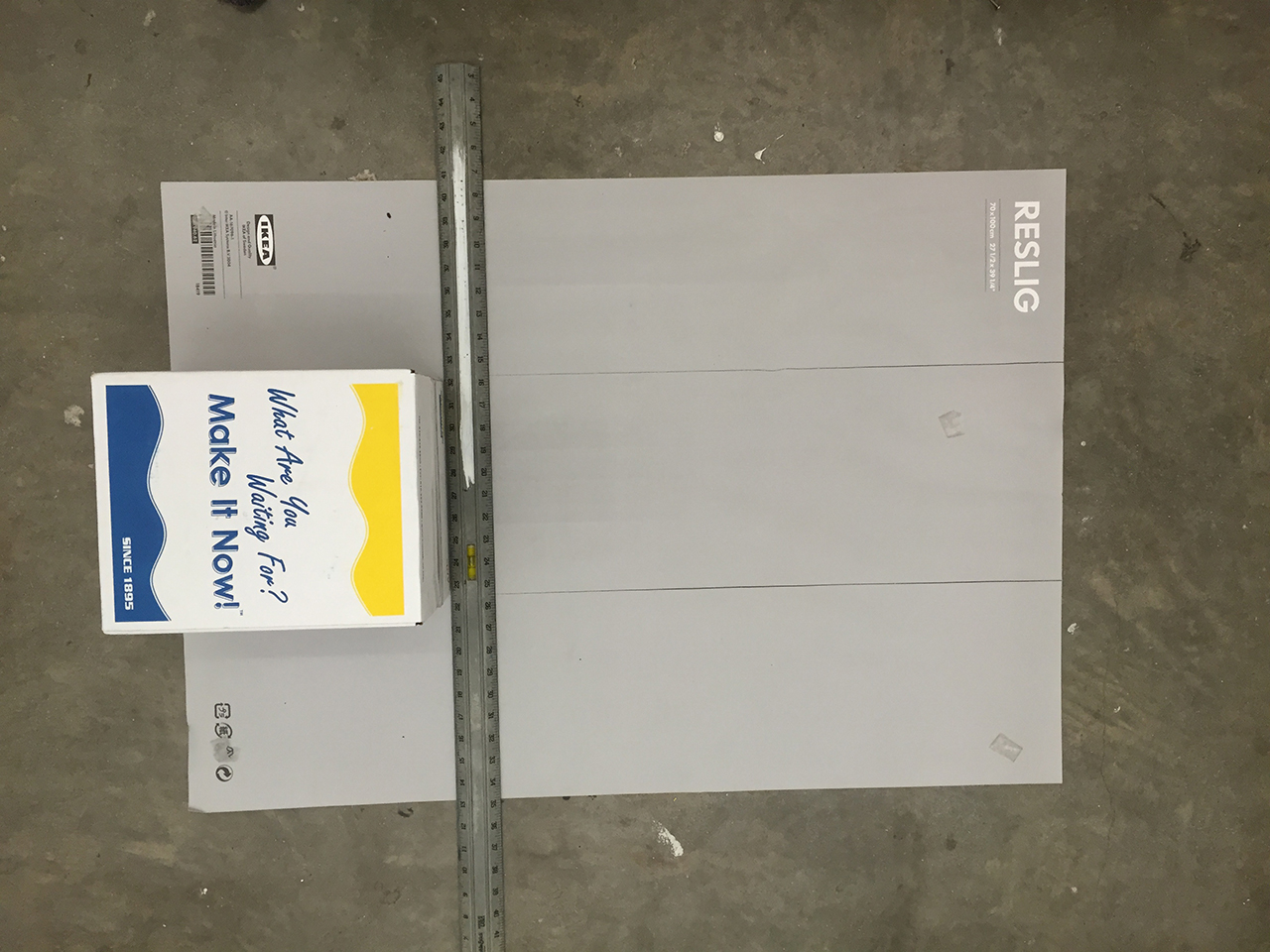

We will also use three OF addons: ofxGui (included with OF); ofxWarpableMesh (download here); and ofxPostProcessing (download here). Download the addons that aren’t included with OF and then unzip them to your OF addons folder.

The code examples for the article are available on my GitHub. They should be unzipped to the apps folder within OF so your folder structure will be…

YOUR_OF_FOLDER/apps/projectionmappingbasics

The name of the accompanying app for each step is given at the start of the step.