Materials

- Raspberry Pi 3 Model A+ single-board computer with 5V power supply and microSD card. (A RaspPi B model is too big to fit.)

- ReSpeaker 2-Mics Pi Hat microphone board, seeedstudio.com

- Miniature speakers, 16mm, 50Ω (2) such as Visaton # 2816, Amazon #B003EA3VPO

- Wood screws, 2mm×10mm (4)

- Hookup wire

- JST 2.0 connector or 3.5mm stereo cable

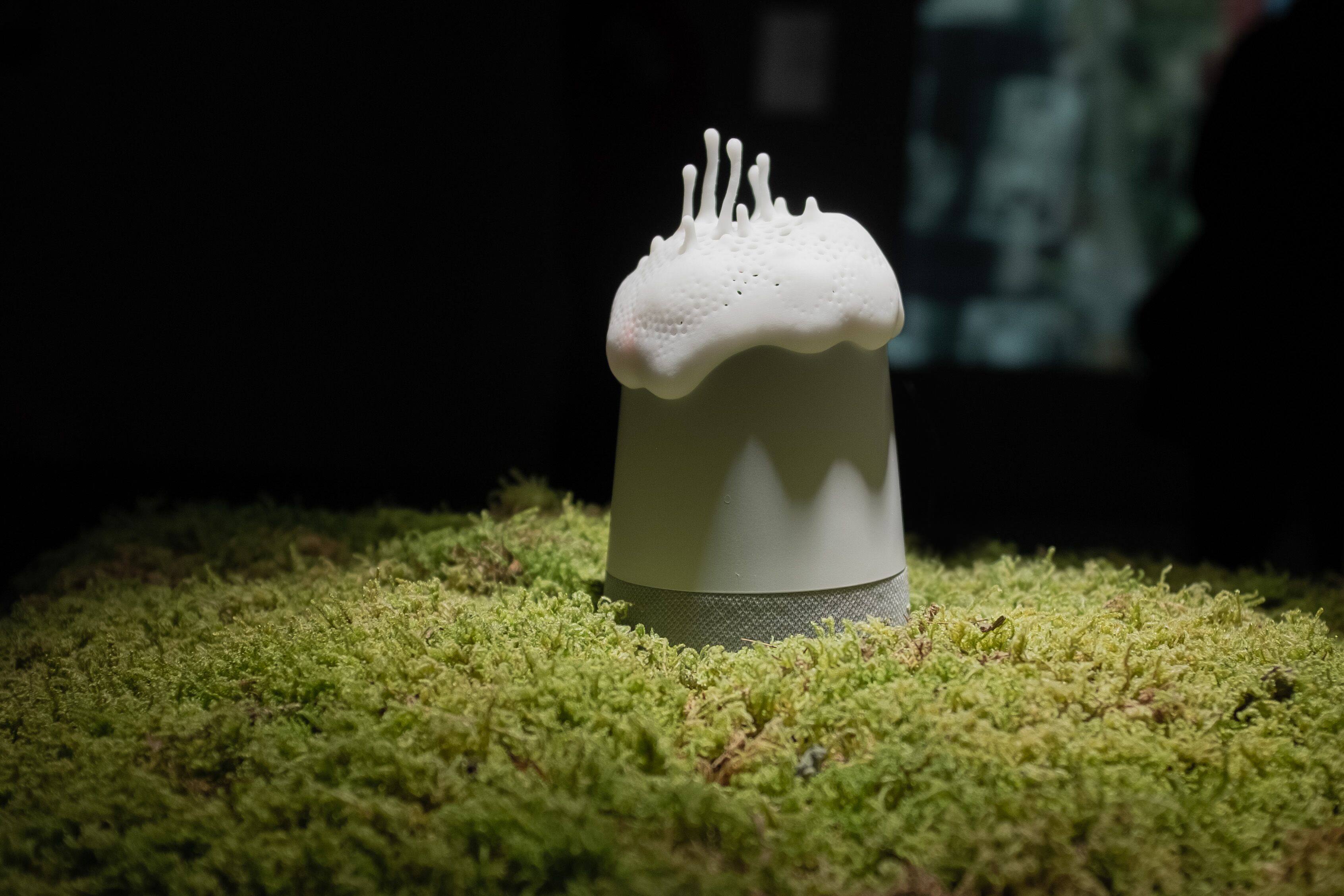

- 3D-printed Alias housing. Design inspired by parasitic fungus! Download the free STL files for printing.

WHAT IS ALIAS?

Alias is a teachable “parasite” that gives you more control over your smart assistant’s customization and privacy. Through a simple app, you can train Alias to react to a self-chosen wake-word; once trained, Alias takes control over your home assistant by activating it for you. When you’re not using it, Alias makes sure the assistant is paralyzed and unable to listen to your conversations.

When placed on top of your home assistant, Alias uses two small speakers to interrupt the assistant’s listening with a constant low noise that feeds directly into the microphone of the assistant. When Alias recognizes your user-created wake-word (e.g., “Hey Alias” or “Jarvis” or whatever), it stops the noise and quietly activates the assistant by speaking the original wake-word (e.g., “Alexa” or “Hey Google”).

From here the assistant can be used as normal. Your wake-word is detected by a small neural network program that runs locally on Alias, so the sounds of your home are not uploaded to anyone’s cloud.
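The cycle described above (mask, listen, wake, resume) can be sketched as a small loop. This is only an illustration under stated assumptions, not the project's actual code: `run_alias`, `heard_phrases`, and the `log` callback are hypothetical names, and `log` stands in for the real audio hardware.

```python
from typing import Callable, Iterable, List


def run_alias(
    heard_phrases: Iterable[str],
    custom_wake_word: str,
    assistant_wake_word: str,
    log: Callable[[str], None],
) -> None:
    """Walk through the mask / listen / wake cycle for each phrase heard.

    `log` is a hypothetical stand-in for real audio actions: an actual
    build would start or stop the noise and drive the speakers instead.
    """
    log("noise on")  # the assistant's microphone is masked by default
    for phrase in heard_phrases:
        if phrase.strip().lower() != custom_wake_word.lower():
            continue  # ignore everything that is not the custom wake-word
        log("noise off")  # let the assistant hear again
        log(f"say: {assistant_wake_word}")  # quietly wake the assistant
        # A real device would wait here while the user talks to the
        # assistant, then resume masking.
        log("noise on")


events: List[str] = []
run_alias(["hello", "Hey Alias"], "hey alias", "Alexa", events.append)
print(events)
```

The recognized phrases are passed in as plain strings so the sequencing can be followed without a microphone attached.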

A YEAR OF ALIAS

When we launched Alias back in January 2019 we had no idea what an amazing journey we were about to step into. It was a hobby project we’d worked on during evening hours for 6 months — a fun challenge and a way to express some thoughts and values around our relation to tech, smart homes, and the future.

It didn’t take long for online magazines and newspapers to catch on. Articles turned into interviews, and awards turned into exhibitions around the world. In the end, it was exactly what we hoped for: We never planned to bring another product into the world, but rather to inspire consumers to try an alternative solution and to spread the idea that we, as the owners of our devices and our data, should have control over them.

Despite all the effort to make it a working prototype, Alias 1.0 was still not an out-of-the-box experience. The code was experimental and rough. So after popular feedback, we decided to rewrite the project from the ground up.

WHAT’S NEW (2.0)

When creating the early prototypes it became clear that there were no real-time sound classifiers fit for our purpose, so we decided to write our own software for sound analysis: we turned the sound into spectrograms and used Keras (a popular TensorFlow wrapper) to build the machine learning logic. Our big ambition with this first approach was to allow any sound to be used, not just words. While it was fun to learn about all this, we never really got it to work better than a proof of concept. So now we’re using an offline speech-to-text engine called PocketSphinx to do keyword detection. This has made Alias more reliable and easier to configure and train, since it relies on words from the dictionary.
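To give a feel for dictionary-based keyword detection: PocketSphinx's keyword-spotting mode can read a plain-text keyword list in which each line holds a phrase followed by a detection threshold between slashes. The sketch below only generates such a file's contents. The function name and the example thresholds are assumptions for illustration, and whether Alias stores its wake-words this way is not stated in the article.

```python
from typing import Dict


def format_keyword_list(thresholds: Dict[str, float]) -> str:
    """Return keyword-list text: one `phrase /threshold/` entry per line."""
    lines = []
    for phrase, threshold in thresholds.items():
        if threshold <= 0:
            raise ValueError(f"threshold must be positive for {phrase!r}")
        # Lowercased on the assumption that the pronunciation dictionary
        # in use stores its words in lowercase.
        lines.append(f"{phrase.lower()} /{threshold:.0e}/")
    return "\n".join(lines) + "\n"


# Example thresholds are placeholders; real values need tuning by ear.
print(format_keyword_list({"Hey Alias": 1e-20, "Jarvis": 1e-10}), end="")
```

Every word in a phrase has to exist in the recognizer's pronunciation dictionary, which is why the wake-words are limited to dictionary words rather than arbitrary sounds.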

Our solution of placing small speakers directly onto the microphone of the assistant inspired a lot of new ideas, and we realized that we could let the user configure custom shortcuts to tell Alias to send longer commands to the assistant. Previously we used an audio snippet of the original wake-word to wake up the assistant; our new design implements a text-to-speech engine (eSpeak) that makes it possible to not only change the wake-word but also specify exactly what commands to send. Now you can start a whole service from one wake-word. For example, you could say “Funky time!” and Alias can pass it along as “OK Google, play some funky music on Spotify.” There’s no limit to how many wake-words and commands Alias can be configured with at once.
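The shortcut idea amounts to a lookup from a heard phrase to the full command that gets spoken to the assistant. Here is a minimal sketch, assuming a simple in-memory table; `SHORTCUTS`, `normalize`, and `command_for` are hypothetical names and not taken from the Alias code.

```python
from typing import Dict, Optional

# Hypothetical shortcut table: spoken trigger -> full command for the assistant.
SHORTCUTS: Dict[str, str] = {
    "hey alias": "OK Google",
    "funky time": "OK Google, play some funky music on Spotify",
}


def normalize(phrase: str) -> str:
    """Lowercase and drop punctuation so "Funky time!" matches "funky time"."""
    kept = "".join(ch for ch in phrase.lower() if ch.isalnum() or ch.isspace())
    return " ".join(kept.split())


def command_for(phrase: str, shortcuts: Dict[str, str] = SHORTCUTS) -> Optional[str]:
    """Return the command to speak for a heard phrase, or None if unknown."""
    return shortcuts.get(normalize(phrase))


print(command_for("Funky time!"))
```

Because the table is an ordinary dictionary, adding another wake-word is just one more entry.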

Having this new programmable voice that communicates with the assistant also made us think of different ways to create “data noise” to make the user more anonymous. So we’ve added a settings page where you can configure different parameters:

• We added a Gender option to let you choose what gender the assistant should perceive when Alias whispers commands. By changing to the opposite gender you’ll be able to introduce false labeling into the assistant’s algorithm. This confusion might lead to interesting interactions and answers.

• We added a Language option to change the language of Alias when it speaks to your assistant — another layer of false labeling that makes the system label you with a different nationality. To use this feature, the command for the assistant has to be written in the selected language.

Besides that, we’ve also added options to optimize the performance of Alias, e.g. sensitivity, noise delay, and volume.
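As a rough picture of how such settings could reach the text-to-speech step, the sketch below turns a language, gender, and volume into an eSpeak command line. It relies on eSpeak's `-v` (voice, with `+f3` or `+m3` style variants) and `-a` (amplitude, 0 to 200) options. The `VoiceSettings` class, its defaults, and the choice of variant numbers are assumptions for illustration, not the project's actual settings format.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class VoiceSettings:
    """Hypothetical container for the voice-related options."""
    language: str = "en"    # eSpeak voice name, e.g. "en", "de", "fr"
    gender: str = "female"  # "female" or "male"
    volume: int = 100       # eSpeak amplitude, 0 to 200


def espeak_args(text: str, settings: VoiceSettings) -> List[str]:
    """Build the argument list for an eSpeak call without running it."""
    variants = {"female": "f3", "male": "m3"}  # assumed variant choice
    if settings.gender not in variants:
        raise ValueError(f"unknown gender: {settings.gender!r}")
    if not 0 <= settings.volume <= 200:
        raise ValueError("volume must be between 0 and 200")
    voice = f"{settings.language}+{variants[settings.gender]}"
    return ["espeak", "-v", voice, "-a", str(settings.volume), text]


settings = VoiceSettings(language="de", gender="male", volume=60)
print(espeak_args("OK Google, spiele Musik", settings))
```

On a machine with eSpeak installed, the returned list could be handed to `subprocess.run` to speak the command. Note that the example text is written in German to match the selected voice, as the Language option requires.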

Follow the instructions at instructables.com/id/Project-Alias to build your new and improved Alias 2.0, and grab the latest code at github.com/bjoernkarmann/project_alias/tree/2.0. We’ve also provided a new .img file, so now installing is as easy as flashing an empty SD card.

CLOSING THOUGHTS

Alias was one of the first public hacks to approach the privacy issues on home assistants, but since early 2018 many more research and hobby projects have been tackling the issue, such as the DolphinAttack method that sends voice commands by modulating ultrasonic frequencies you can’t hear, or the recent Light Commands attack that uses lasers from a distance to manipulate the microphone.

It’s clear this is a problem we all care about but have yet to find the right solution for. The future is hopefully going to be a decentralized one with private smart homes. But until that happens it’s important to not accept the predefined lifestyle from Silicon Valley and to question whether it’s really as “smart” as they say!