Projects from Make: Magazine

Kinect-based Servo Control

This project explores techniques for real-time control of servo motors using the Kinect for Windows sensor.

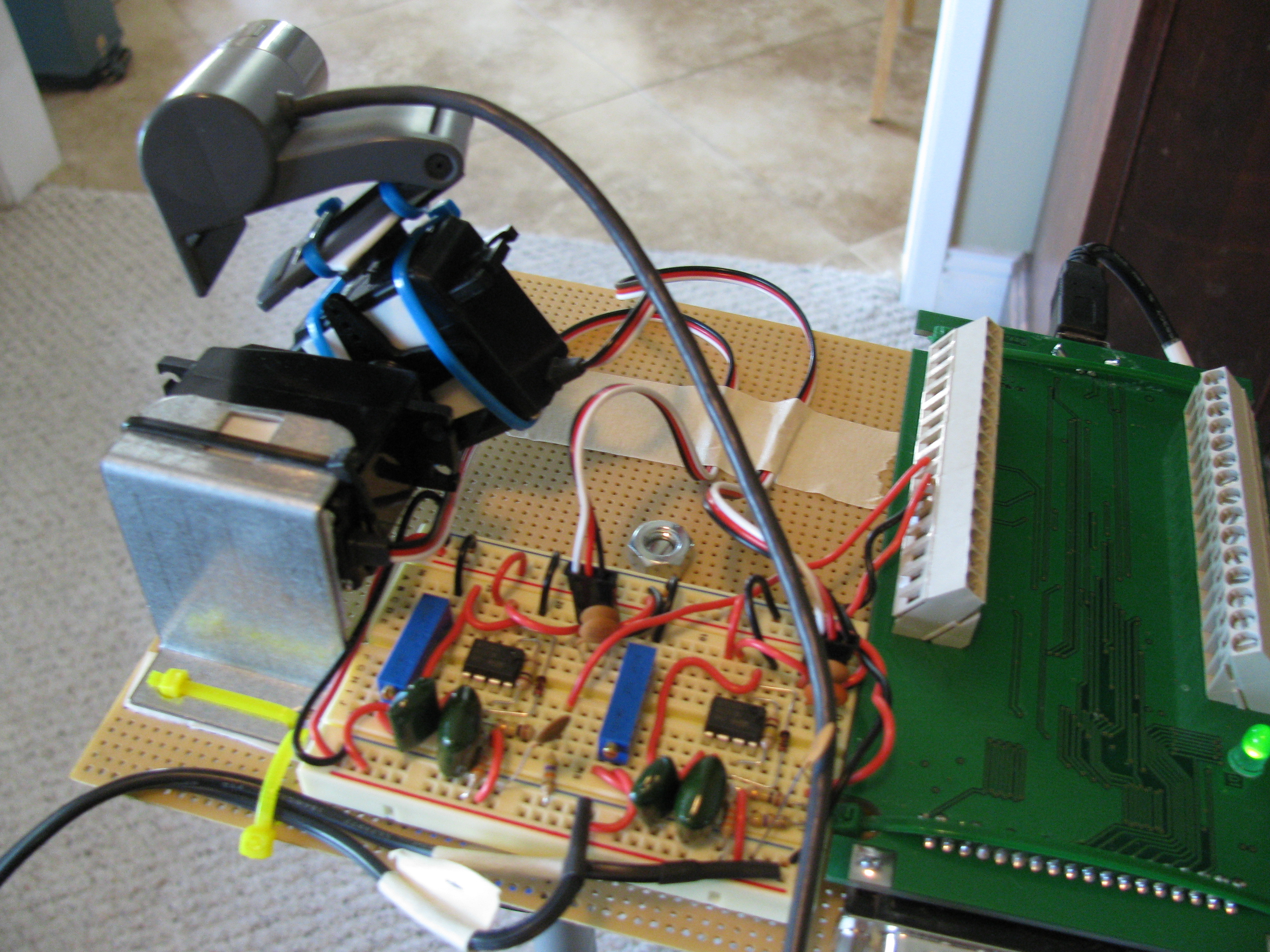

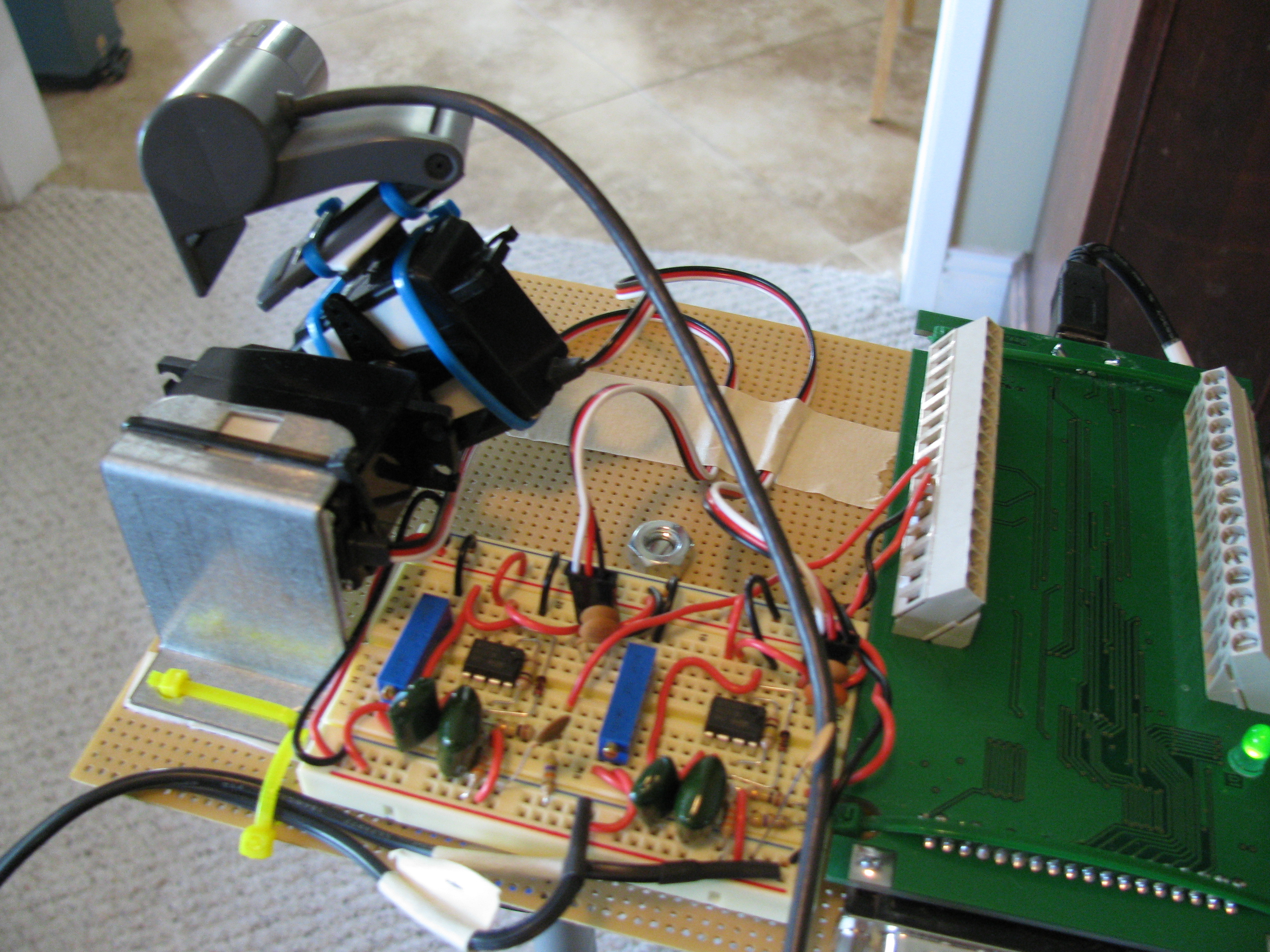

The first phase of this project demonstrates basic servo control using a Kinect sensor. Ultimately, the project will add voice-recognition commands for controlling a robotized webcam. In this first iteration, the webcam is tie-wrapped to a pair of inexpensive servos, which are in turn tie-wrapped together to provide two-axis positioning. The control circuit is a breadboarded voltage-to-PWM converter driven by two 12-bit analog outputs (DACs) on a USB-based data acquisition board. The software is written in C# using the Kinect for Windows v1.0 SDK.
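To give a feel for the software side, here is a minimal sketch of how a tracked hand position could be scaled to the two 12-bit values sent to the data acquisition board. It is written in Python for brevity rather than the C# used in the project, and it is not the project's actual code. The travel limits, the names `AxisRange`, `position_to_code`, and `hand_to_codes`, and the simple linear mapping are all assumptions made for illustration. The only things taken as given are the 12-bit resolution mentioned above and the fact that Kinect skeleton coordinates are reported in meters.

```python
from dataclasses import dataclass

# 12-bit outputs, as described in the text: codes run from 0 to 4095.
OUTPUT_BITS = 12
MAX_CODE = (1 << OUTPUT_BITS) - 1


@dataclass(frozen=True)
class AxisRange:
    """Hand travel (in meters) that maps onto full servo travel.

    These limits are hypothetical; real values depend on where the
    user stands relative to the sensor.
    """
    low: float
    high: float


def position_to_code(position: float, axis: AxisRange) -> int:
    """Linearly map a hand coordinate to a 12-bit output code.

    Positions outside the axis range are clamped so the servo is
    never commanded past either end of its travel.
    """
    clamped = max(axis.low, min(axis.high, position))
    fraction = (clamped - axis.low) / (axis.high - axis.low)
    # Round to the nearest code (add 0.5, then truncate).
    return int(fraction * MAX_CODE + 0.5)


def hand_to_codes(x: float, y: float,
                  pan: AxisRange, tilt: AxisRange) -> tuple[int, int]:
    """Convert a hand (x, y) position into (pan_code, tilt_code)."""
    return position_to_code(x, pan), position_to_code(y, tilt)


if __name__ == "__main__":
    # Assumed travel: half a meter left/right, 0.4 m up/down.
    pan_range = AxisRange(low=-0.5, high=0.5)
    tilt_range = AxisRange(low=-0.2, high=0.6)
    print(hand_to_codes(0.0, 0.2, pan_range, tilt_range))
```

Writing the resulting codes to the board is left out on purpose, since that step depends entirely on the vendor's driver API. In a real loop, this mapping would run once per skeleton frame, with the hand joint's x and y values as inputs.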

For the first experiment, the webcam assembly was placed a few feet in front of the Kinect sensor. This video shows the response I was able to achieve using left-hand gesture tracking.

My breadboarded circuit was producing some unwanted jitter in the servos, but overall I was happy with the results.

Next, I decided to experiment with the newly released Kinect SDK v1.5 and its face-tracking features. Here’s a link to a video.

The laptop I’m using in this experiment is badly underpowered for the job, so the video results are poor, but I wanted to capture what I was able to cobble together in an afternoon. I still have some work to do on the servo responsiveness, but I like where this is going. The baseline project is part of the new SDK Developer Toolkit (Face Tracking Basics in C#).